Shell scripts suffer from an issue that plagues many aspects of life: where to draw the line? For some people, a one-hundred-line shell script is too long. For others, that’s a regular day. But most people would agree that a 10,000-line shell script should have been written in a more robust programming language. So why the contention? It’s because shell makes a lot of tradeoffs that are good for quick one-liners and dozen-line scripts but become hindrances when managing more complex problems. No one actually sets out to make a monster script, but as it grows the sunk cost fallacy rears its head and suddenly you’re “in for a penny, in for a pound” [1]. And even if you could navigate around all of the issues with shell scripting, continuing to use it means missing out on decades of progress in programming language design.

📌 Note. The GNU Coreutils are not the shell or bash, and shells are not terminals, though the terms get used interchangeably. The Coreutils are a collection of C programs [2] that provide useful functions you can execute in a command line environment. They include cat, chmod, cp, mv, ls, and others. Your terminal is a program, for example LXTerminal on the Raspberry Pi, and is a vehicle to run your shell.

I’ve never been much of a shell scripting guy, but I knew a lot of people in the bioinformatics world who were. Whether by necessity, lack of time, compatibility, or inertia, their pipelines consisted of a chain of bash scripts. This never sat right with me, but that may be because I was taught to build my pipelines in Python using snakemake [3]. But good software needs to contend with the real world, and small scripts accrue weight as they handle edge cases, errors, and compatibility issues. That’s just the way it goes. Even so, I don’t always want to reach for Python to do simple tasks if I can help it. So, is there a better way? Is there a language that is simpler than Python, wouldn’t make me feel bad if it was 500 lines long, and could better handle the unfortunate complexities that plague modern programming? Enter our contender: PowerShell.

In 2016 [4], PowerShell became available to Linux and macOS users. Built on the open source .NET Core technology, its GitHub tagline proudly exclaims:

PowerShell for every system!

Microsoft agreed that having a command line is useful, but felt the DOS-era command line interpreter that shipped with Windows was holding them back. So Jeffrey Snover and his team built a new language called PowerShell to solve this problem, and version 1.0 shipped in 2006. Unfortunately, it took Microsoft some time to understand its value, and Snover has said he was demoted over it.

He would eventually be vindicated, as PowerShell became a huge reason why Microsoft was able to succeed in the cloud computing market, but he had to endure “5+ years of being in the doghouse” as he described it.

With it becoming open source in 2016, it became useful on platforms other than Windows. As someone who mostly has to write code for himself or a small team, PowerShell looked attractive. I decided to experiment with it in a worst case scenario: on a Raspberry Pi 4. I’ve encountered many programs that claim Linux compatibility but don’t support my favorite hobby platform, arm64, so if I could get it to work there, I would feel pretty confident about its cross-platform claim.

A basic overview of PowerShell 📘

PowerShell uses cmdlets, which work similarly to the GNU Coreutils on a Linux terminal. They are typically named in the format Verb-Noun. The language is designed to be simple and convenient, and it favors convenience wherever it can. It tries to “do the right thing” depending on your OS. For example, typing…

set-location ~

on PowerShell in Linux and…

cd ~

on Windows both send you to whatever your Home directory is. Some other examples are…

Most cmdlets and their flags are case insensitive

Common operations usually have syntactic sugar which make them easier to type

Many commands can be tab completed

Cmdlets return objects not text, making it easier to access specific components of the returned object

Can accept file paths with “\” or “/” as separators and uses the appropriate one depending on the platform when tab completing.

get-help <command> with the flags -detailed, -full, or -online for various forms of documentation on a cmdlet

Many more.

Cmdlets can be aliased, which gives them shorter names that are easier to type in quick one-liners. On Windows, PowerShell aliases common Coreutils names to their PowerShell equivalents.

This makes it easier to transition to PowerShell if you are familiar with Linux style shells. PowerShell used to work this way on Linux as well, but due to confusion with running Coreutils vs PowerShell aliases, that was removed.
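If you are curious what an alias actually points to, Get-Alias will tell you. Here is a small sketch of looking aliases up and defining one of your own. The alias name procs is something I made up for illustration, and the list printed for Get-ChildItem will differ between Windows and Linux.

```powershell
# Look up what a built-in alias points to.
$target = (Get-Alias -Name iwr).Definition

# List every alias defined for a cmdlet (the list differs between Windows and Linux).
Get-Alias -Definition Get-ChildItem | Select-Object -ExpandProperty Name

# Define a session-local alias; "procs" is a made-up name for illustration.
Set-Alias -Name procs -Value Get-Process
$resolved = (Get-Alias -Name procs).Definition
```

Aliases created this way only last for the current session unless you add them to your profile.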

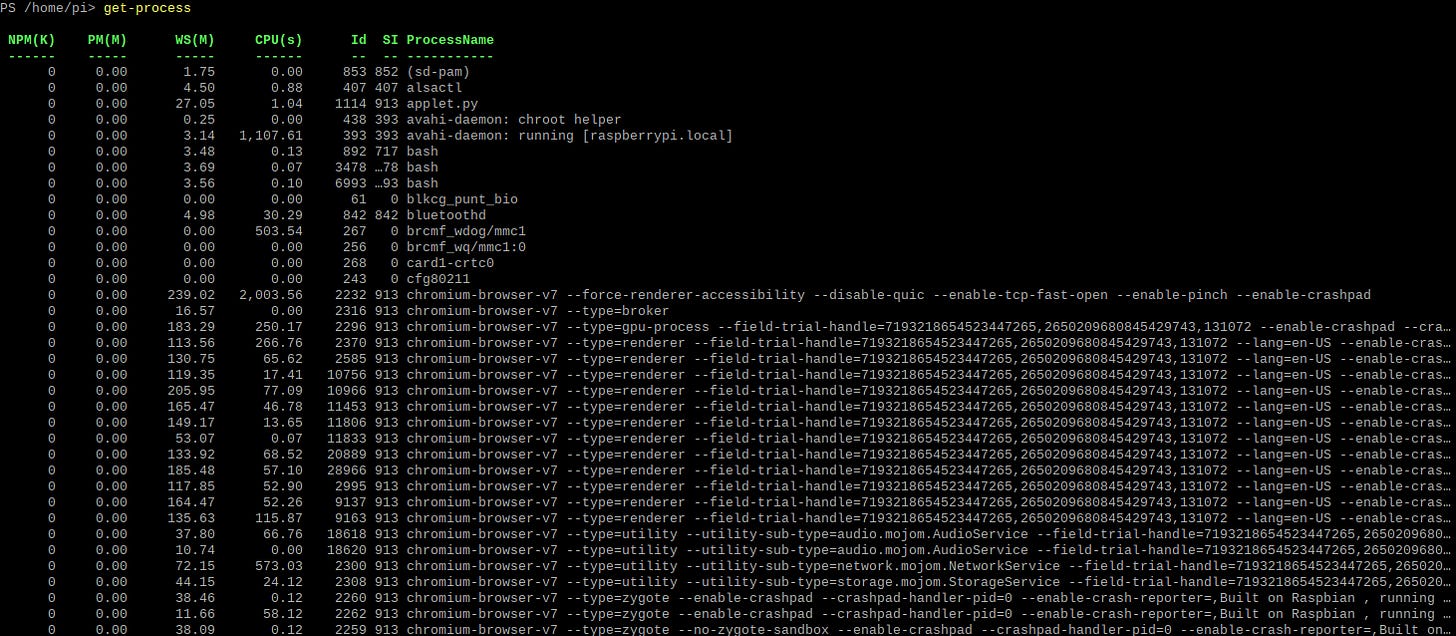

Since PowerShell returns objects when running cmdlets, you can select various components of the returned object just like in any other OOP language. For example, Get-Process is PowerShell’s rough equivalent of top.

Typing Get-Process | Get-Member will show you the members of the returned objects. The ones you will be most interested in are those whose MemberType contains “Property”.

These can be easily accessed with either the select cmdlet or the dot operator. For example, if I wanted to just select the processName of each process using get-process I can type

(get-process).processName

And if I want to select the ProcessName, SI, ID, and CPU in that order I can do…

get-process | select processname, si, id, cpu

No trying to slice up strings, and nicely formatted output. Nice.
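To make the object idea concrete without depending on whatever happens to be running on your machine, here is a minimal sketch that uses hand-made objects as stand-ins for what Get-Process returns. The process names and numbers are invented for illustration.

```powershell
# Hypothetical stand-ins for the objects Get-Process returns.
$procs = @(
    [pscustomobject]@{ ProcessName = 'bash';    Id = 101; CPU = 0.5 }
    [pscustomobject]@{ ProcessName = 'pwsh';    Id = 202; CPU = 3.2 }
    [pscustomobject]@{ ProcessName = 'python3'; Id = 303; CPU = 1.7 }
)

# Dot operator on a collection: PowerShell collects the property from every element.
$names = $procs.ProcessName

# Select-Object keeps only the named properties, in the order given.
$slim = $procs | Select-Object Id, ProcessName

# Get-Member lists the members; -MemberType Properties narrows it to properties.
$props = $procs | Get-Member -MemberType Properties | Select-Object -ExpandProperty Name
```

The same three operations work unchanged on the real output of Get-Process.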

Examples using PowerShell 💪🐚

Now that we have a basic overview of how PowerShell works, let’s see how it can solve some simple tasks. But first, I needed to install PowerShell on the Raspberry Pi. This turned out to be easier than I expected: Microsoft actually has an Installing PowerShell on Raspberry Pi OS page [5]. So, with that out of the way, let's dive into some examples.

Downloading a single file from the web

Downloading structured data from URLs is a common operation I need to do, and luckily PowerShell has a way of doing that with the Invoke-WebRequest cmdlet.

Invoke-WebRequest -Uri "https://github.com/mwaskom/seaborn-data/raw/master/penguins.csv" -OutFile "penguins.csv"

This is a mouthful, but between parameter abbreviation and aliases we can shorten it. Aliases are shortened forms of cmdlet names that come at the cost of comprehension. PowerShell also lets you type only the beginning of a parameter name: as long as that prefix matches exactly one of the command’s parameters, it works as intended.

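iwr -ur github.com/mwaskom/seaborn-data/raw/master/penguins.csv -outf penguins.csv

From 112 characters down to 82, about 73% of the original. (-OutFile needs at least -outf, because a bare -o also matches the common parameters -OutVariable and -OutBuffer.) One of my former bosses used to tell me…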

We each have a finite number of keystrokes in our lives. Use them well

He would be proud. For the sake of this article, I’ll continue using non-abbreviated commands, but keep in mind that most of these commands can be shortened.
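If you want to see how parameter shortening behaves without hitting the network, here is a small sketch. Save-Thing and Save-ThingAdvanced are hypothetical functions I made up for this demo, not real cmdlets; they only echo their arguments.

```powershell
# Save-Thing is a hypothetical function, not a real cmdlet; it only echoes its arguments.
function Save-Thing {
    param(
        [string]$Uri,
        [string]$OutFile,
        [string]$UserAgent
    )
    "$Uri -> $OutFile"
}

# -ur matches only -Uri and -o matches only -OutFile, so both prefixes bind.
$result = Save-Thing -ur 'example.com/data.csv' -o 'data.csv'

# -u could mean -Uri or -UserAgent, so PowerShell refuses to guess.
$ambiguous = $false
try { Save-Thing -u 'example.com/data.csv' -o 'data.csv' } catch { $ambiguous = $true }

# [CmdletBinding()] adds the common parameters (-OutVariable, -OutBuffer, ...),
# so the same -o prefix is no longer unique.
function Save-ThingAdvanced {
    [CmdletBinding()]
    param([string]$Uri, [string]$OutFile)
    "$Uri -> $OutFile"
}
$commonClash = $false
try { Save-ThingAdvanced -ur 'example.com/data.csv' -o 'data.csv' } catch { $commonClash = $true }
```

Compiled cmdlets carry the common parameters too, so how short a prefix can get depends on what else the command accepts.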

Various CSV operations

As a Data Analyst, I live and breathe CSVs. When using bash, I often struggle to use cut, grep, and sort effectively to get data out of them. PowerShell has some good tools for working with common data formats, and that includes CSVs, so I was interested to see what that workflow looked like.

Quickly view a nicely formatted csv

Sometimes I don’t want to open up the csv in Excel/LibreOffice Calc to look at its contents. The ConvertFrom-Csv and Format-Table cmdlets come to the rescue. This one-liner prints out a nicely formatted table with just the first 10 lines of the CSV.

Get-Content penguins.csv -Head 10 | ConvertFrom-Csv | Format-Table

Adding the -Head flag keeps it manageable when the files are very large.

Unique values in a column

Getting the unique values in a specific column is useful when determining what values I need to account for in my scripts. Since CSVs can be interpreted like objects, getting this information is simple. By using Import-Csv we can have PowerShell read the CSV and turn each row into an object with one property per column. Then we can use the dot operator to read the .Species property across all rows, which gives us the species column. Finally, we pipe that to Sort-Object with the -Unique flag to get the unique species.

(Import-Csv penguins.csv).Species | Sort-Object -Unique

Rows that match a specific value

Sometimes I’m only interested in a part of a CSV where rows match a specific value. In those cases I can use the Where-Object cmdlet (aliased as Where), which lets me filter the data in a style reminiscent of SQL.

Get-Content "penguins.csv" | Where {$PSItem -match "Gentoo"}

$PSItem and $_ can be used interchangeably (though the more verbose $PSItem is preferable in official scripts). They are automatic variables that refer to the current object in the pipeline.
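Here is a self-contained sketch of the last two operations that you can paste into a prompt without downloading anything. The rows are a few made-up lines in the same shape as penguins.csv, and ConvertFrom-Csv stands in for Import-Csv since the data lives in a string rather than a file.

```powershell
# A few made-up rows in the same shape as penguins.csv.
$csvText = @'
species,island,body_mass_g
Adelie,Torgersen,3750
Gentoo,Biscoe,4500
Chinstrap,Dream,3500
Gentoo,Biscoe,5700
'@

# ConvertFrom-Csv turns the text into objects with one property per column.
$rows = $csvText | ConvertFrom-Csv

# Unique values in a column.
$species = $rows.species | Sort-Object -Unique

# Filter on a column value; $_ and $PSItem name the same current object.
$gentooA = $rows | Where-Object { $_.species -eq 'Gentoo' }
$gentooB = $rows | Where-Object { $PSItem.species -eq 'Gentoo' }
```

Filtering on a property like this only looks at the species column, whereas matching against the raw lines from Get-Content would also hit "Gentoo" appearing anywhere else in a row.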

Create a csv subset by a specific value

This one is particularly helpful. After filtering my csv in PowerShell I’d like to be able to put the output in a new file. Thankfully along with an Import-Csv cmdlet there is also an Export-Csv cmdlet and it does what you’d expect.

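Import-Csv -Path penguins.csv | Where { $_.species -eq "Gentoo" } | Export-Csv -Path output.csv -NoTypeInformation

Two notes that tripped me up. First, the -NoTypeInformation option keeps Export-Csv from writing a #TYPE header line at the top of the file; Windows PowerShell 5.1 needs it, while PowerShell 6 and later behave this way by default. Second, the rows have to come from Import-Csv. If you pipe the raw lines from Get-Content into Export-Csv instead, the export contains the properties of the string objects rather than your data, and looks like this. Which is not what you want.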

"PSPath","PSParentPath","PSChildName","PSDrive","PSProvider","ReadCount","Length"

"/home/pi/penguins.csv","/home/pi","penguins.csv","/","Microsoft.PowerShell.Core\FileSystem","222","39"

"/home/pi/penguins.csv","/home/pi","penguins.csv","/","Microsoft.PowerShell.Core\FileSystem","223","35"

"/home/pi/penguins.csv","/home/pi","penguins.csv","/","Microsoft.PowerShell.Core\FileSystem","224","39"

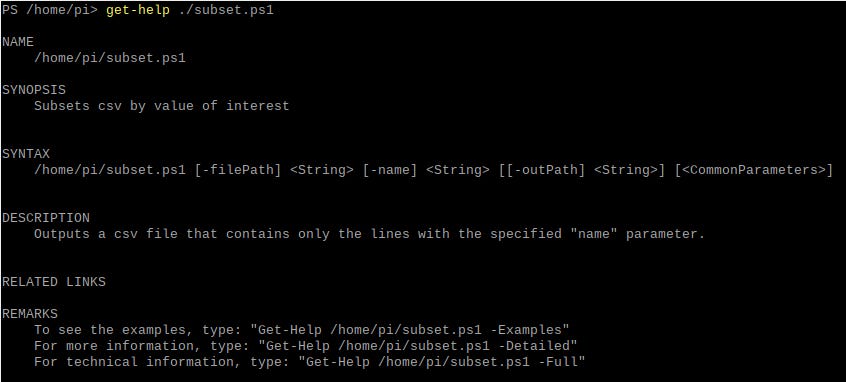
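The Length column in that output is a hint about what is going on: the CSV cmdlets write out an object's properties, and a plain string's main property is its length. A minimal sketch of the difference, using ConvertTo-Csv (which produces the same text as Export-Csv but returns it instead of writing a file) and two made-up rows:

```powershell
# Made-up rows standing in for penguins.csv.
$rows = @(
    [pscustomobject]@{ species = 'Adelie'; island = 'Torgersen' }
    [pscustomobject]@{ species = 'Gentoo'; island = 'Biscoe' }
)

# Objects export one column per property.
$good = $rows | Where-Object species -eq 'Gentoo' | ConvertTo-Csv -NoTypeInformation

# A bare string exports its own properties (Length), not its text.
$bad = 'Gentoo,Biscoe' | ConvertTo-Csv -NoTypeInformation
```

Here $good holds a species,island header plus the Gentoo row, while $bad holds a Length header and the number 13.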

This PowerShell one-liner is actually so useful to me that I converted it into a PowerShell script complete with documentation.

<#

.SYNOPSIS

Subsets csv by value of interest

.DESCRIPTION

Outputs a csv file that contains only the lines with the specified "name" parameter.

.PARAMETER filePath

The csv file you would like to subset

.PARAMETER name

The value you want the csv line to contain

.EXAMPLE

./subset.ps1 -filePath penguins.csv -name Gentoo

#>

param(

[Parameter(Mandatory=$true)][string]$filePath,

[Parameter(Mandatory=$true)][string]$name,

[string]$outPath

)

$data = Import-Csv $filePath

$content = $data | Where { $_.species -eq $name }

if ([string]::IsNullOrEmpty($outPath))

{

$baseName = [System.IO.Path]::GetFileNameWithoutExtension($filePath)

$OutPath = "${baseName}_subset.csv"

}

if ($null -eq $content)

{

Write-Host "No rows in csv matched $name"

} else

{

$content | Export-Csv -Path $outPath

Write-Host "Writing output to $outPath"

}

And since PowerShell has nice syntax for documentation using comments, I can type get-help ./subset.ps1 to see the help page generated from them.

If I want to see an example, I can just type get-help ./subset.ps1 -Example.

Since it's a script, I use a few new features. For example, adding custom arguments that are used when calling the script, specifying which are mandatory, and declaring their types using type accelerators [6].

param(

[Parameter(Mandatory=$true)][string]$filePath,

[Parameter(Mandatory=$true)][string]$name,

[string]$outPath

)

Storing results in a variable

$data = Import-Csv $filePath

And most interestingly, calling out to C#. In this line I call the static method IsNullOrEmpty on the .NET String class [7], the same class C# uses.

if ([string]::IsNullOrEmpty($outPath))

And on this one, I use the static method GetFileNameWithoutExtension from System.IO.Path.

[System.IO.Path]::GetFileNameWithoutExtension($filePath)

This is great, as I can leverage thoroughly tested code from C# while accessing it through a PowerShell-flavored escape hatch.

This is a prime example of a useful one-liner being expanded into its own documented script. PowerShell has tools to make the code more comprehensible, and when we want to do something that isn’t built in, we can leverage the power of C# for just the parts where PowerShell is lacking, without having to context switch.
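The escape hatch is easy to try on its own at the prompt. This sketch calls the same two static methods the script uses, with a made-up path, and then builds the default output name the same way the script does.

```powershell
# Static .NET methods are called with [TypeName]::MethodName(arguments).
$empty    = [string]::IsNullOrEmpty('')
$notEmpty = [string]::IsNullOrEmpty('penguins.csv')

# Strip the directory and extension from a (made-up) path.
$baseName = [System.IO.Path]::GetFileNameWithoutExtension('data/penguins.csv')

# Build the default output name the same way the script does.
# The braces keep PowerShell from reading "_subset" as part of the variable name.
$outPath = "${baseName}_subset.csv"
```

With this input, $baseName ends up as penguins and $outPath as penguins_subset.csv.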

And other formats as well!

PowerShell has the ability to work with a wide variety of formats natively out of the box on Linux. You can see them in the screenshot below…

Getting the size of a file

When working with large files on the command line, I often wonder about their size. This is typically reported in bytes and can be accessed in PowerShell like this…

(get-item penguins.csv).size

Since I’m too lazy to convert this into a useful size in my head, PowerShell has some handy constants built in, written as 1KB, 1MB, 1GB, 1TB, and 1PB, that enable my laziness.

(get-item penguins.csv).size/1mb

This produces the same size, but this time in MB.
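A quick sketch of what those constants expand to, using a made-up byte count instead of a real file. Note that they are powers of 1024, and that [math]::Round is the .NET rounding function, handy for trimming the long decimals you usually get.

```powershell
# The suffixes are multipliers on numeric literals (powers of 1024).
$kb = 1KB
$mb = 1MB

# A hypothetical file size in bytes, converted to MB and rounded to two decimals.
$bytes = 5767168
$inMB  = [math]::Round($bytes / 1MB, 2)
```

Here $kb is 1024, $mb is 1048576, and $inMB comes out to 5.5.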

Processes

Getting Processes by memory

Seeing which processes are hogging all my memory is a snap using get-process and sort-object

get-process | sort-object WS -Descending
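The full process list can be long, so I usually want just the top few. A minimal sketch with invented processes and working-set sizes, followed by the same pipeline against the real Get-Process:

```powershell
# Invented processes; WS is the working set (memory in use) in bytes.
$procs = @(
    [pscustomobject]@{ ProcessName = 'bash';    WS = 4MB }
    [pscustomobject]@{ ProcessName = 'firefox'; WS = 900MB }
    [pscustomobject]@{ ProcessName = 'pwsh';    WS = 120MB }
)

# Largest first, then keep only the top two.
$top2  = $procs | Sort-Object WS -Descending | Select-Object -First 2
$names = $top2.ProcessName

# The same idea against live data: the five biggest memory users.
Get-Process | Sort-Object WS -Descending | Select-Object -First 5 -Property ProcessName, Id, WS
```

In the made-up data, $names holds firefox followed by pwsh.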

Killing a process

Killing a process is done with stop-process. No need to fiddle with kill, pkill, and killall. By simply typing…

get-process | where processname -eq <myprocess> | stop-process

you can kill any process. Using -match instead of -eq allows regexes. There are more examples I could show, but I think I’ve convinced myself: PowerShell does have the capability to become the default shell in my workflow.
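Before pointing stop-process at something real, it can help to rehearse. This is a small sketch using the -WhatIf switch, which makes Stop-Process report what it would do without doing it. I aim it at the current PowerShell session via the automatic $PID variable purely as a safe demo target, and the regex pattern is just an example.

```powershell
# -WhatIf makes Stop-Process report what it would do without killing anything.
# $PID is the automatic variable holding the current PowerShell process id.
Get-Process -Id $PID | Stop-Process -WhatIf

# -match takes a regular expression; this example pattern matches process names
# that start with "pwsh" or "powershell".
$shells = Get-Process | Where-Object ProcessName -Match '^(pwsh|powershell)'
```

Once the -WhatIf output names the processes you expect, drop the switch to actually stop them.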

Don’t forget the Oil ⛽

I would be remiss if I didn’t talk about Oil shell, which also aims to be an improved shell [8]. It's important to be clear about what Oil is and is not. According to the website, Oil is two languages: a POSIX-compliant shell (OSH), and the Oil programming language. The two work together to ease the transition from a more standard shell language to an upgraded scripting language (Oil) that is free of many of shell's language warts. While I haven't used Oil personally, at first glance I think the language does one major thing better than PowerShell, and it even says as much on the website's homepage.

It's our upgrade path from bash to a better language and runtime.

If you just want a better shell experience, Oil is a great alternative that has spent a lot of time making itself feel native on Linux. The reality is that Linux is built around text, not objects, and that sometimes clashes with the way PowerShell does things. So why use PowerShell when you can use Oil? I think PowerShell’s cross-platform story is better when one of your targets is Windows. Since I work regularly on the Big 3 (would love to add BSD at some point!), PowerShell is great for my needs. But I would encourage you to give Oil a look as well!

What’s the point?

There was never any doubt that shell scripting is useful and productive. The one-liners can make lots of tasks quicker than breaking out a “real” programming language. But why PowerShell over something like bash? Especially on Linux? Well, if you already know bash and aren’t allowed to put PowerShell on your environments, there is no point. But if you have a little more control over your environment and are looking to improve your own workflows, there is a stronger case. Let’s go over some pros and cons.

Pros

If you already know PowerShell then using it on Linux is a no brainer

Working with objects is easier than working with strings

An easier cross-platform story. Linux sysadmins on Windows and Windows sysadmins on Linux can navigate in ways they are familiar with

A rich set of cmdlets which can be extended with C#

Benefits from a lot of modern conveniences learned in the more than 15 years between bash’s creation and PowerShell’s

Leveraging functions in C# to avoid having to reinvent the wheel

Cons

A Microsoft language that contains lots of Microsoftisms, e.g. extending cmdlets with C#

Will not always be available on all Linux systems

Linux is not designed to work with objects by default

Yet another dependency

Can’t use sudo in PowerShell. Must start PowerShell with sudo

Even with aliases, the language is verbose, and feels better written in a text editor

With all that said, I still think it’s worth giving it a try. Microsoft doesn’t have the best reputation with everyone though, so I understand if you’re thinking I’m some sort of PowerShill right now…

But I have not actually been sponsored by Microsoft. Anyway, I'm interested to hear what you think. Do you use PowerShell as a replacement shell? What are your thoughts on the language? Do you use Oil? Tell me how you like it. Finally, for those of you who made it this far, take a look at these cheesy PowerShell Hero Comics [9]. If my pun didn't get you to crack a smile, maybe they will 🙂.


[1] “In for a penny, in for a pound” is an English idiom. It means to see an action through to the end regardless of the consequences.