Why we need more programming languages

Don't we have enough already?

I came across a post on the internet whose message was along the lines of…

Why do we keep inventing new programming languages?

Learning new syntax is a huge time sink for programmers

It’s hard to find people who are experts in my multi-language stack, therefore new languages make this worse.

These are fair questions to ask, and I’ll do my best to answer them now.

Punched Cards 🥊

We’ve been using the idea of a “punched card” to store data since 1725, when Basile Bouchon figured out he could control a loom with punched paper tape. Fast forward 250 years: I was able to find examples of punched cards for computer use dating to at least 1980, with the ISO 6586:1980 standard for the implementation of the ISO 7-bit and 8-bit coded character sets on punched cards[1]. By most estimates punched cards fell out of favor by the mid-1980s, yet the idea behind them is about 300 years old today.

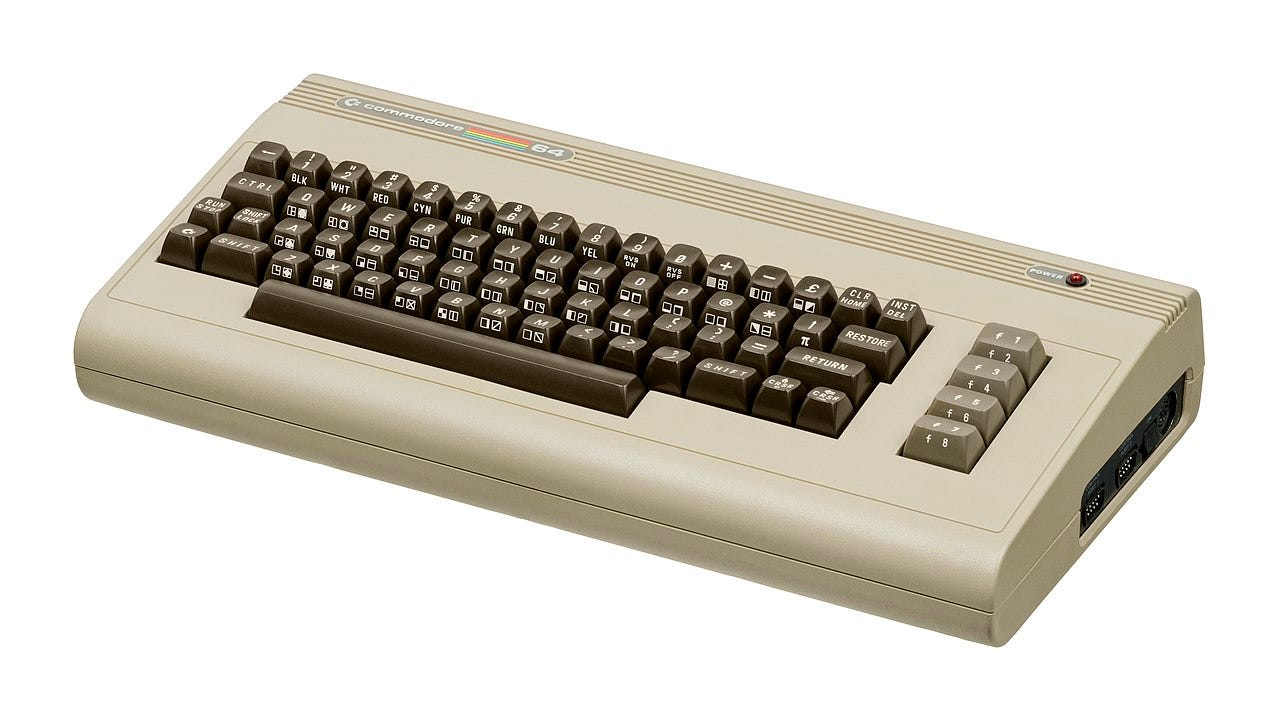

While I’m sure someone can find an example of punch cards still being used in some critically important system today, it’s safe to say modern programming does not involve them in any meaningful way. By modern programming I mean typing a program into a computer with a keyboard, saving it to disk, and executing it. That style of programming has been around for much longer, but it has only been the dominant form since about the 1980s.

The 80s were also when the power of computers and their accessibility, in both hardware and software, began to converge. Think computers with 64K of memory. More software was written in languages other than assembly, without the specialized hardware some languages had needed (like Lisp machines), on computers sized and priced for ordinary people. This was the era of the IBM PC, the Commodore VIC-20 and 64, the Apple IIe and Macintosh, the ZX Spectrum, and many others. These computers were a lot of people’s first experience with programming, and thankfully they didn’t require punch cards. That makes the modern programming era less than 50 years old.

Most of us have learned about the three-age system of human history, which includes the Stone Age, Bronze Age, and Iron Age. The Bronze Age, when we first started using tools made of metal, ran from about 3300 B.C. until the start of the Iron Age around 1200 B.C., roughly 2,000 years. Take any of the old professions, like woodworking, leatherworking, clothes making, or animal husbandry, and you can find periods of hundreds to thousands of years where the work was done in pretty much the same way. While it is true that human technological advancement has accelerated rapidly since the Industrial Revolution in the 18th century, it would be hard to argue that post-industrial-revolution jobs haven’t benefited from the couple hundred years of experience we’ve gained since it began. The same goes for the thousands of years of experience behind professions that predate the Industrial Revolution.

So how does this relate to creating new programming languages? With less than 50 years behind it, modern programming is still young, and we haven’t even scratched the surface of what a modern programming language can do or what programming language theory can discover for us. Just look at the features that are still making their way into programming languages today:

Generics

Pattern matching

Optional types

Nullable types

Type inference

Gradual typing

Hot reloading

Async/await

First-class functions

Algebraic types

As an example, Go didn’t get generics until version 1.18[2], which, at the time of writing this article, was released last year. People still get excited about new features being added to their favorite language, though maybe less so in the C++ crowd. C++ is actually a good example of a language that keeps adding features, but many of those features exist to replace old ways of doing things, make up for its warts, or make it safer. It was a pioneer in reinventing systems programming languages but has accumulated a lot of dead weight in the process. It’s impressive how much they manage to add to the language with each standard, but that has a cost. I remember someone on Hacker News asking why lambdas in C++ look the way they do, and the response was "Because C++ didn't have any other available syntax." New languages can benefit from all of the advances in programming language theory without having to stay backwards compatible with decades of code, which greatly simplifies them.

The longer a programming language has been around, the harder it is to adopt its new features. Because of C++’s age, most real-world projects you work on are large, older codebases that don’t get to use the features of the last 5-10 years that are supposed to make life easier and programs safer. This creates a level of friction in C++ projects that has frustrated people to the point where they leave the language entirely or create their own. These problems are not unique to C++; other languages of similar age experience them as well.

Learning new syntax is hard 🗿

I find this argument against new languages strange. Most programming languages use one of a handful of syntax styles, so once you become familiar with a style, every language that uses it is easy to read. You’ve got your curly-brace, C-like languages like C++, Java, JavaScript, and C#; your parenthesis-based languages like Scheme and Common Lisp; your whitespace-based languages like Python and Nim; your Smalltalk-like languages like Pharo, Ruby, and Crystal; and whatever the heck APL is[3]. The point is that once you know a few different languages, you’ll have basically Pareto-principled[4] the syntax of the most common programming languages.

Combine that with a little Google and Stack Overflow, and you’ll be hacking away in a new language within days, if not hours. With tools like ChatGPT now available, it’s also simple to give it a block of code in a language you understand well and ask it to translate that code into the language you are learning. Whenever you make a mistake, just paste the code and the error into the chat box, and it will usually point you in the right direction, assuming you aren’t programming in a language it doesn’t really know, like Simula.

The argument that goes hand in hand with this is that learning a programming language is like learning a new spoken language. While that’s technically true, programming languages have a lot less in common with natural languages than you might think. There is a reason we don’t write programs in plain English:

It’s verbose

It’s unnecessarily hard to parse

It’s not context free

We specify programming languages with formal grammars, usually context-free ones, because we need a precise and unambiguous way to define their syntax and structure. I think the closest we’ve ever gotten to that in spoken language is Lojban[5], a constructed language that is syntactically unambiguous, meaning phrases like “I saw the man with the telescope,” which can be interpreted in more than one way, can’t happen. So if you have more than a year or two of programming under your belt and you’ve dabbled in at least one other language, learning a new one should be a non-issue.
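To make the “unambiguous” point concrete, here is a minimal sketch of a recursive-descent parser for a toy arithmetic grammar made up for this example (it isn’t any real language’s grammar). A context-free grammar alone doesn’t guarantee a single reading: `expr := expr "+" expr | expr "*" expr` is context-free but ambiguous. It’s the layering below (`expr`, `term`, `factor`) that forces `1 + 2 * 3` to parse exactly one way, unlike the telescope sentence. The `Parser` and `tokenize` names are hypothetical.

```python
from __future__ import annotations

import re
from typing import Optional, Union

# Toy grammar, invented for this example (not any real language's):
#   expr   := term   ("+" term)*
#   term   := factor ("*" factor)*
#   factor := NUMBER | "(" expr ")"
# Putting "*" one level below "+" is what makes every input parse one way.

Tree = Union[int, tuple]  # an int leaf or an (operator, left, right) tuple
TOKEN = re.compile(r"\s*(\d+|[+*()])")


def tokenize(text: str) -> list[str]:
    tokens, pos = [], 0
    text = text.rstrip()
    while pos < len(text):
        m = TOKEN.match(text, pos)
        if m is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


class Parser:
    """Recursive-descent parser: one method per grammar rule."""

    def __init__(self, text: str) -> None:
        self.tokens = tokenize(text)
        self.i = 0

    def peek(self) -> Optional[str]:
        return self.tokens[self.i] if self.i < len(self.tokens) else None

    def take(self) -> str:
        tok = self.peek()
        if tok is None:
            raise SyntaxError("unexpected end of input")
        self.i += 1
        return tok

    def parse(self) -> Tree:
        tree = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return tree

    def expr(self) -> Tree:
        node = self.term()
        while self.peek() == "+":
            self.take()
            node = ("+", node, self.term())  # left-associative
        return node

    def term(self) -> Tree:
        node = self.factor()
        while self.peek() == "*":
            self.take()
            node = ("*", node, self.factor())
        return node

    def factor(self) -> Tree:
        tok = self.take()
        if tok == "(":
            node = self.expr()
            if self.take() != ")":
                raise SyntaxError("expected ')'")
            return node
        if tok.isdigit():
            return int(tok)
        raise SyntaxError(f"unexpected token {tok!r}")


print(Parser("1 + 2 * 3").parse())    # ('+', 1, ('*', 2, 3))
print(Parser("(1 + 2) * 3").parse())  # ('*', ('+', 1, 2), 3)
```

If you want the other reading, you have to say so explicitly with parentheses, which is exactly the kind of disambiguation English leaves to context.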

Finding an expert in your stack 🤔

The other argument that frequently comes up against new programming languages is that they fragment tech stacks. But the reality today is that very few programmers have the luxury of working in a single language anyway, and this has been true for a long time. Even before the explosion of languages we have now, we had to contend with different flavors of assembly that were usually incompatible across computers. Here is a video of Bjarne Stroustrup talking about how 5 languages is a good number to know, and this was 11 years ago.

So, unless you work exclusively with the web or do microcontroller work, chances are you will have to interact with more than one programming language. Besides, as someone who has hired multiple people, I can tell you it’s hard to find a person who is an expert in exactly “your stack”. More often than not we get someone who is merely close enough. Maybe if I worked for a company like Google we’d have a glut of perfectly qualified people, but I suspect my hiring experiences are not unusual. And unless your company uses only open-source technology, you will probably have some secret sauce that no new hire will know, so there will always be an onboarding process. In my experience, the biggest points of friction in onboarding have always come from the undocumented behaviors of a product, the institutional knowledge locked up in someone’s head, or the technical debt, not the programming languages themselves.

I hope this article has given you an overview of why we still need new programming languages today, and why learning new programming languages is a skill that gets easier with practice. When new features like f-strings in Python come out, my life gets a little bit easier. When new languages like Zig, Rust, Go, or Crystal come out, they don’t just reinvent the programming language; they also provide a useful suite of tools to make programming easier. I’m excited to see what awesome new programming languages we develop in the next decade, and I can’t wait to see the tooling that comes with them. So keep developing programming languages, language designers, and maybe one day you’ll create the next big one.
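For anyone who hasn’t used them, here is a tiny before-and-after sketch of the f-string feature mentioned above (f-strings arrived in Python 3.6; the `=` specifier shown on the last line needs Python 3.8 or newer). The variable names and values are just placeholders.

```python
language = "Python"
version = 3.6

# Before f-strings: str.format() with placeholders kept in sync by hand.
old = "f-strings arrived in {} {}".format(language, version)

# With f-strings: the expressions sit right where they are used.
new = f"f-strings arrived in {language} {version}"

print(old == new)     # True
print(f"{version=}")  # version=3.6
```

It’s a small change, but it removes a whole class of “wrong argument in the wrong placeholder” mistakes.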

Call To Action 📣

If you made it this far, thanks for reading! If you are new, welcome! I like to talk about technology, niche programming languages, AI, and low-level coding. I’ve recently started a Twitter account and would love for you to check it out. I also have a Mastodon account if that is more your jam. If you liked the article, consider liking and subscribing. And if you haven’t already, why not check out another one of my articles? Thank you for your valuable time.

[4] The Pareto Principle, also known as the 80/20 rule, is named after Vilfredo Pareto. It is often used to describe how most of the work (roughly 80%) can be completed with a small share (roughly 20%) of the effort, while the remaining small chunk needed for a complete system takes a disproportionate amount of effort.