The Evolution of Computing: From 8-bit to 64-bit

Computing technology has undergone significant transformations since its initial accessibility to the masses. One particular evolution that stands out is the shift from 8-bit to 64-bit computing architectures. I still vividly remember my first encounter with these terms during my childhood, while playing on my father's Sega Genesis. There was a white "16-bit" label adorning the front of the console.

At its core, a "bit" represents the smallest actionable unit within a computer, taking on values of either 0 or 1. When four bits come together, they form a "nibble". By switching some of those bits on or off, we can represent a total of 2^4 (16) distinct values. Doubling our nibble gives us a "byte," a unit comprising eight bits, capable of expressing a whopping 256 different values.
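The counting above can be checked with a few lines of Python. This is only a minimal sketch; the helper name `distinct_values` is made up for illustration.

```python
def distinct_values(bits: int) -> int:
    """Number of distinct values that `bits` binary digits can represent."""
    return 2 ** bits


# A single bit, a nibble (4 bits), and a byte (8 bits), as described above.
for name, bits in [("bit", 1), ("nibble", 4), ("byte", 8)]:
    print(f"{name:>6}: {bits} bit(s) -> {distinct_values(bits)} values")

# Every pattern a nibble can hold, from 0000 to 1111.
nibble_patterns = [format(n, "04b") for n in range(distinct_values(4))]
print(nibble_patterns[0], "...", nibble_patterns[-1])
```

Each extra bit doubles the count, which is why going from a nibble to a byte takes us from 16 values to 256 rather than to 32.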

In the early days of computing, bit lengths weren't always powers of two. Nonetheless, many early computers embraced these dimensions. The natural unit of data a CPU handles in a single operation is usually referred to as a "word." For instance, the Motorola 68000, commonly classed as a 16-bit CPU, used a 16-bit word. As technology surged forward, 32-bit CPUs sported a 32-bit word size, and the pattern persisted through subsequent generations.
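One rough way to see what word size means in practice is to watch what happens when a result no longer fits. The sketch below imitates unsigned addition on a CPU with a given word size by masking off the carry; the function name `add_in_word` is hypothetical, and real CPUs also set flags that this ignores.

```python
def add_in_word(a: int, b: int, word_bits: int) -> int:
    """Add two unsigned integers as a CPU with `word_bits`-wide words would:
    any carry out of the top bit is discarded."""
    mask = (1 << word_bits) - 1  # e.g. 0xFFFF for a 16-bit word
    return (a + b) & mask


print(add_in_word(65535, 1, 16))  # wraps around in a 16-bit word
print(add_in_word(65535, 1, 32))  # fits comfortably in a 32-bit word
```

The same addition wraps to zero with a 16-bit word but gives 65536 with a 32-bit word, which is one reason wider words made larger numbers cheaper to work with.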

Understanding these nuances not only unveils the layers of computer architecture but also shines a light on the boundless innovations that have driven technology to where it stands today. As we reflect upon the journey from 8-bit computers to modern computing powerhouses, it's clear that every "bit" of advancement has shaped the landscape we navigate.

8-Bit Computers

Our journey through the bits begins in the mid-1970s and early 1980s with the advent of 8-bit microprocessors. These early chips, like the Intel 8080 and the MOS Technology 6502, laid the foundation for personal computing. With limited processing power and memory addressability, the computers built around them were primarily used for basic tasks such as word processing, simple games, and basic programming.

Despite their limitations, 8-bit computers sparked a revolution by making computing accessible to the masses. The iconic Commodore 64, for instance, became one of the best-selling home computers of all time, showcasing the potential of computing beyond business and scientific use. Some other popular 8-bit computers of the day were…

Apple II

Atari 400

Sinclair ZX Spectrum

BBC Micro

Besides business software, these 8-bit CPUs powered some of the most popular games of the day. This included games like…

Tetris

Pac-Man

Pitfall!

It's hard to believe, seeing what we have now, that we could do anything on these computers. Specifications varied, but using the popular Commodore 64 as a yardstick, 8-bit computers had these specs…

CPU: ~1 MHz

Memory: 64 KB

Storage: 5¼" floppy disk @ 170 KB

Not much by today's standards, but look no further than the demoscene to see what amazing things people have been able to do with them.

The transition to 16-bit

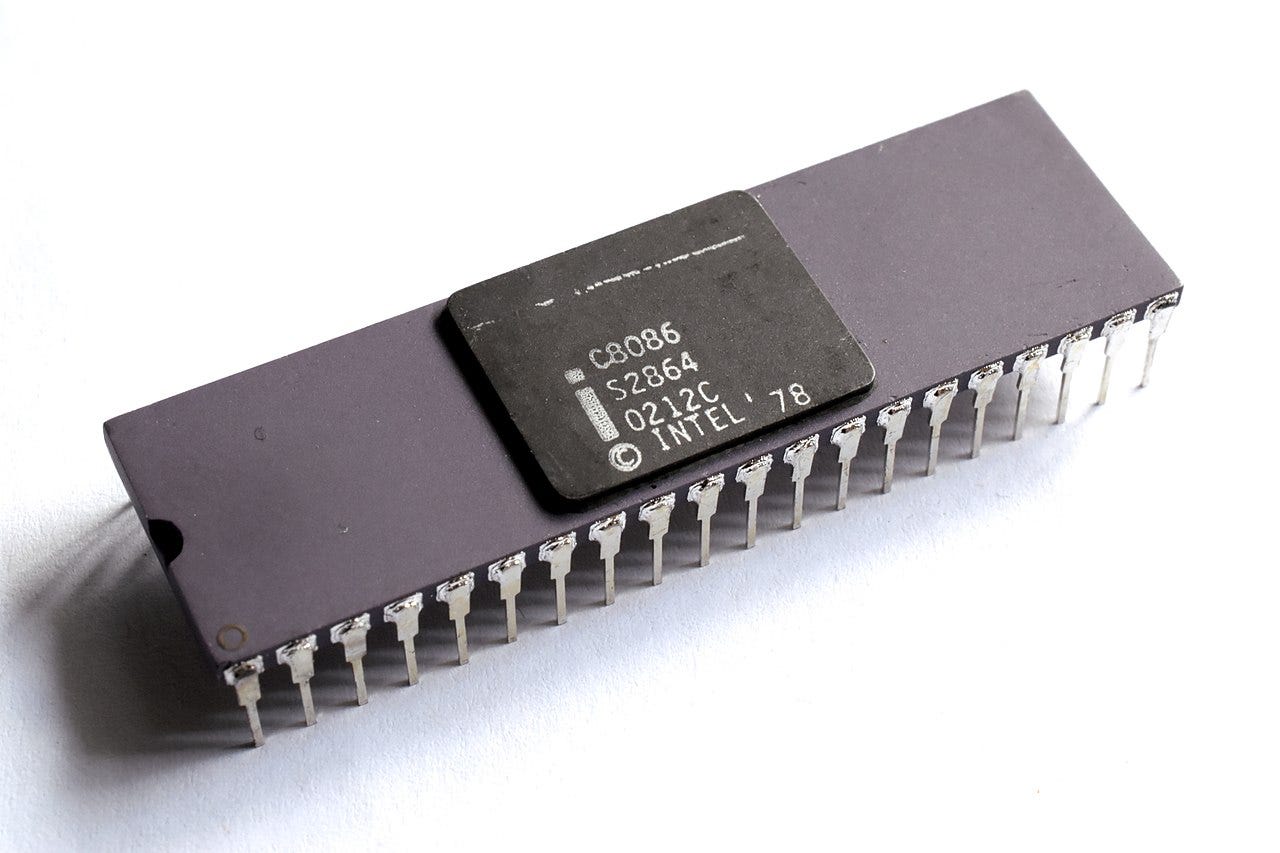

As technology advanced, so did computing architectures. The 16-bit era, exemplified by processors like the Intel 8086 and Motorola 68000, brought improvements in processing power and memory capacity. This led to the rise of more complex applications, better graphics, and improved multitasking capabilities.

The gap between the first 16-bit personal computers and the first 32-bit computers was very short, as the pace of innovation was quick during this time. Even before the advent of 8-bit personal computers in the late 70s and early 80s, a select few were using minicomputers that were already 16-bit by the early 70s. For example, the PDP-11 that Ken Thompson was working on while developing Unix was 16-bit. Many of the 16-bit personal computers also had more powerful, but also more expensive, 32-bit variants that came out around the same time. This blurred the timeline between 16-bit and 32-bit computers. Just focusing on 16-bit computers we have…

The IBM PS/2 (the 16-bit varieties)

Tandy 2000

Many of the computers used for NASA's Space Shuttles

The 16-bit era coincided with the fourth generation of gaming consoles. This was the era of the Sega Genesis and Super Nintendo Entertainment System, which reached North America in 1989 and 1991 respectively. They came a little later than their computer counterparts but were cheaper. Some popular games were…

Super Mario World

Sonic the Hedgehog

Chrono Trigger

Aladdin

As many computers were 16/32-bit hybrid machines, it's difficult to pin down common specs for a typical 16-bit computer. But since the Sega Genesis was a popular console, we will use its specifications as a proxy for a computer of that time:

CPU: 7.6 MHz

Memory: 64 KB RAM + 64 KB of VRAM + 8 KB of audio RAM

Storage: 256 KB to 4 MB (cartridge)

Between the Commodore 64, released in 1982, and the Genesis, released in 1989, clock speeds jumped more than 7x. But this was only the beginning.

32-bit is here to stay

The mid to late 1980s marked the start of the 32-bit era. This period was catalyzed by processors like the Intel 80386 (also referred to as the 386), which made its debut in 1985, and the Motorola 68030. These processors ushered in a new era of performance and could address far larger amounts of memory. This era also bore witness to the rise of graphical user interfaces (GUIs), a pivotal step that laid the foundation for the modern computing experience we enjoy today. Games benefited too: Wolfenstein 3D, released in 1992 by id Software, was a popular first-person shooter that showed what 386-class PCs could do. With clock speeds ranging from 12 to 40 MHz, the 386 provided the backbone for early 32-bit computers. These machines typically had specifications that looked like this:

CPU: 20-40 MHz

RAM: 8 MB

Storage: 200 MB

The early 2000s marked the logical conclusion of the 32-bit era. Spanning a remarkable 18 years, this phase witnessed a comprehensive overhaul of every aspect of computing, resulting in substantial leaps in power and performance. The era largely predates multi-core CPUs, and typical specifications at the end of it were as follows:

CPU: ~3 GHz

RAM: 2-4 GB

Storage: 80-250 GB

The 32-bit era remains relevant today because programs designed solely for 32-bit environments still exist. Notably, Valve's popular game client, Steam, remains a 32-bit program. This has started to cause problems. For instance, as of the time of writing, Steam's 32-bit nature has led to complications with the latest Ubuntu version. Many operating systems have dropped or are sunsetting 32-bit support. macOS High Sierra 10.13.4, released in early 2018, began warning users about 32-bit apps, and macOS Catalina (10.15) stopped running them altogether in 2019. Microsoft followed suit, no longer offering 32-bit builds of Windows 10 to PC makers for new machines as of the May 2020 update.

But hold on, you might be wondering, "Can't 64-bit CPUs run 32-bit programs? Why does it matter if 32-bit mainstream CPUs are no longer prevalent?" You're correct, dear reader, but the messy world of software doesn't always make this viable. Here are a couple of reasons why:

System Libraries and Dependencies: When a 32-bit program is designed and compiled, it often relies on 32-bit system libraries and dependencies. These libraries provide essential functions and resources that the program needs to run. A 64-bit operating system can carry both 64-bit and 32-bit versions of these libraries. However, if the 32-bit version of a library is missing (because the OS doesn't ship one by default) or not properly configured on the system, the 32-bit program might not work correctly or might not work at all.

End of Support: In some cases, software developers have chosen to drop support for 32-bit programs. This could be due to reasons such as the need to focus resources on improving 64-bit versions, security concerns, or the desire to move forward with modern technology standards. Eventually, as your computer is updated, an update might introduce incompatibilities with your previously working 32-bit program. If the developer has decided not to support the 32-bit version of the program anymore, this will never get fixed.

So, while 64-bit CPUs can technically run 32-bit programs, the compatibility issues arise from the availability of required libraries, system policies, and software development decisions. Users might need to take specific steps to enable 32-bit support on their systems, but many won't, effectively phasing out these programs.
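To make the 32-bit versus 64-bit distinction concrete, here is a small sketch that asks the running Python interpreter which kind of process it is. It only uses the standard library; note that a 32-bit interpreter on a 64-bit OS will report 32, and what `platform.machine()` returns varies between operating systems.

```python
import platform
import struct
import sys

# Size of a C pointer in the running interpreter, in bits: 32 or 64.
pointer_bits = struct.calcsize("P") * 8

# sys.maxsize is 2**31 - 1 on 32-bit builds and 2**63 - 1 on 64-bit builds.
is_64bit_process = sys.maxsize > 2**32

print(f"This Python process is {pointer_bits}-bit")
print(f"Machine type reported by the platform module: {platform.machine()}")
```

A 32-bit process like this is exactly the kind of program that depends on the 32-bit system libraries discussed above.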

The Revolution of 64-bit Computing

Correction: MIPS Technologies, SPARC, and Hewlett-Packard all had 64-bit implementations before Intel and AMD. Thanks, Pete Windle.

We presently find ourselves in the era of 64-bit computing. On the PC side, it began with Intel's first 64-bit processor, the Itanium, introduced in 2001, followed by AMD's Opteron in 2003. Though these initial processors were predominantly aimed at enterprise applications, the broader introduction happened with AMD's Athlon 64 series in 2003 and Intel's 64-bit Pentium 4 models in 2004. An interesting thing happened during this period. Itanium used an entirely new instruction set architecture (ISA) that was not natively compatible with the existing 32-bit x86 ISA. This contrasted with the 32-bit ISA's debut, which offered substantial backward compatibility with 16-bit programs. To understand why this matters, we have to go deeper.

An instruction set is a collection of machine-level instructions that a CPU can execute. These instructions define the basic operations that the CPU can perform, such as arithmetic operations, data movement, logical operations, and control flow. Each instruction in the instruction set is represented by a binary code that the CPU understands. When a program is compiled or assembled, high-level programming language instructions are translated into these machine-level instructions that the CPU can directly execute. The difference between a 32-bit instruction set and a 64-bit instruction set (and indeed any of the previous ones) lies mainly in the width of the registers and memory addresses that the instructions operate on:

32-Bit Instruction Set

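In a 32-bit ISA, the general-purpose registers, and the integers the CPU works on natively, are 32 bits (4 bytes) wide. The instructions themselves need not be 32 bits long: x86 instructions, for example, vary from 1 to 15 bytes.

Memory addresses in a 32-bit ISA are also 32 bits in length, which means the CPU can directly address up to 4 GB of memory (2^32 memory locations).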

The 32-bit instruction set can represent a limited range of memory addresses and numerical values compared to a 64-bit instruction set.

64-Bit Instruction Set


In a 64-bit ISA, the general-purpose registers, and the integers the CPU works on natively, are 64 bits (8 bytes) wide. Again, this says nothing about instruction length: x86-64 instructions are still 1 to 15 bytes long.

Memory addresses in a 64-bit ISA are 64 bits in length, allowing the CPU, in principle, to address multiple orders of magnitude more memory, at 2^64 memory locations (16 exabytes), compared to the 2^32 of 32-bit. The 64-bit instruction set provides a larger addressable memory space, allowing for more efficient handling of very large datasets and complex applications, and better performance in certain scenarios, like operating on a 64-bit integer in a single instruction instead of splitting it across two 32-bit registers.
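The address-space figures above follow directly from the address width. Here is a minimal sketch of the arithmetic, assuming byte-addressed memory and binary units (GiB and EiB); the function name `addressable_bytes` is made up for illustration.

```python
def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with byte-addressed memory and `address_bits`-wide addresses."""
    return 2 ** address_bits


GIB = 2 ** 30  # gibibyte
EIB = 2 ** 60  # exbibyte

print(addressable_bytes(32) // GIB, "GiB with 32-bit addresses")
print(addressable_bytes(64) // EIB, "EiB with 64-bit addresses")
print(addressable_bytes(64) // addressable_bytes(32), "times larger")
```

Doubling the address width does not double the address space, it squares it: 2^64 is 2^32 times larger than 2^32.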

Armed with this understanding, we can now delve into some programming minutiae. When a program is described as x86 or Win32, that signifies a 32-bit version of the program. Because the 80386 was Intel's first 32-bit x86 CPU, the 32-bit architecture is also sometimes referred to using the last three digits of the CPU's designation, prefixed with an 'i', as in i386. Correspondingly, 64-bit programs are often designated as x64, x86_64, or Win64. Since AMD's 64-bit extensions to the 32-bit ISA ultimately won out over Intel's incompatible approach, some programs carry the label AMD64 to denote 64-bit.

Returning to the 64-bit architecture, it's worth noting that in 2005, AMD lodged an antitrust lawsuit against Intel. The dispute was not settled until 2009, and the settlement included a renewed patent cross-license agreement between the two companies. Cross-licensing like this is what gives Intel access to AMD's 64-bit, backwards-compatible extensions, and AMD access to Intel's 32-bit architecture. I really appreciate this, as it means we don't have to deal with incompatible ISAs from the competing CISC brands today.
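The naming conventions above can be summed up as a small lookup table. This is only an illustrative sketch: the table covers just the labels mentioned in this article, and `bits_for_label` is a hypothetical helper, not part of any real tool.

```python
from typing import Optional

# Labels mentioned above, lowercased, mapped to the bitness they denote.
ARCH_BITS = {
    "x86": 32, "i386": 32, "win32": 32,
    "x64": 64, "x86_64": 64, "win64": 64, "amd64": 64,
}


def bits_for_label(label: str) -> Optional[int]:
    """Return 32 or 64 for a known architecture label, or None if unrecognized."""
    return ARCH_BITS.get(label.strip().lower())


for label in ["i386", "AMD64", "x86_64", "Win32"]:
    print(f"{label:>7} -> {bits_for_label(label)}-bit")
```

Note that the plain label x86 conventionally means 32-bit, even though x86_64 contains it as a prefix, so matching on whole labels is safer than matching on substrings.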

With 64-bit addresses, our computers can in theory handle 16 exabytes of memory. Considering that the most common consumer-grade DDR5 sizes are 8GB, 16GB, and 32GB (with some single sticks going up to 64GB), and that the average ATX motherboard has only 4 RAM slots, we are using only a tiny fraction of what our current CPUs can handle. Even so, operating system developers are wary of assuming we will be on 64-bit forever, and some operating systems have already started updating code to work with 128 bits. With that being said, here are the specs of a 64-bit computer using some data from the Steam Hardware Survey. Maybe your PC looks similar to this :)

CPU: 6 cores @ ~2.5 GHz

RAM: 16 GB

Storage: 500 GB - 1 TB SSD
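Going back to the memory ceiling mentioned earlier, here is a quick sketch of how small a fully loaded consumer board is compared to the 64-bit address space. It assumes four slots filled with 64 GB sticks and treats GB as binary gigabytes; both are simplifications for the sake of the arithmetic.

```python
GIB = 2 ** 30

slots = 4             # RAM slots on a typical ATX board, as described earlier
largest_stick = 64    # GiB, the largest single stick mentioned earlier

installed = slots * largest_stick * GIB   # 256 GiB in bytes
address_space = 2 ** 64                   # bytes reachable with 64-bit addresses

fraction = installed / address_space
print(f"Installed: {installed // GIB} GiB")
print(f"Fraction of the 64-bit address space: {fraction:.2e}")
print(f"The address space is {address_space // installed:,} times larger")
```

Even a maxed-out desktop uses well under a millionth of what 64-bit addresses can describe.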

Not fully reflected in the specs is how fast everything has gotten. Our RAM is now up to its fifth generation, with DDR5 timings playing an important role in CPU performance. Solid state drives (SSDs) have become commonplace in most setups, overtaking hard drives, with some setups boasting multiple SSDs instead of a hard drive + SSD. Although the fastest CPUs don't have substantially higher clock speeds than the fastest 32-bit CPUs did, the proliferation of cores and advancements in instructions per cycle (IPC) have significantly boosted their performance, surpassing that of older CPUs operating at similar clock speeds.

Impact on Software and Applications

The move from 8-bit to 64-bit architectures didn't just affect hardware; it also had a profound impact on software development. Software had to be adapted to take full advantage of the enhanced capabilities offered by the ever-increasing power of these processors. Along the way, CPUs gained branch prediction, SIMD, L1, L2, and L3 caches, and many other improvements. This also allowed general-purpose, garbage-collected languages to become more popular, as they could now run desktop applications at reasonable speeds.

Operating systems, applications, and games had to be re-engineered to harness the benefits of these new advances in computing architecture. When the PlayStation 5 came out, it relied on its ultra-fast SSD to increase the size and scope of its games without creating additional long loading times.

But this greedy consumption of system resources by software hasn't always been met with praise by other developers. Since at least the 80s, people have complained about software eating up all the performance gains of hardware. This has led to what is now known as Andy and Bill's law, named after Andy Grove (former Intel CEO) and Bill Gates (former Microsoft CEO).

What Andy giveth, Bill taketh away.

One of the amazing things about programming and computers is that a lot of people who played an impactful role in advancing it are still alive today. I’m amazed to see what progress we’ve made in such a short time, and I’m looking forward to where we will be in the decades to come.

