’Twas the night before Christmas, when all through the house

Not a creature was stirring, not even a mouse;

Except a man at his computer,

Who dared to try;

to make a language that would change lives,

Not even he knew what it would be;

Not one version, or two, but three

I imagine that’s how it went over the Christmas break in 1989 when Guido van Rossum started on his hobby project. What is there to say about Python that hasn’t already been said? It’s one of the most popular languages in existence, the first language I really learned how to program in, and one of the biggest names in the machine learning space. By all accounts, it should never have gained the popularity it did. Perl was already a few years ahead, having had its 1.0 release in 1987, and would become deeply entrenched in Unix administration, CGI scripting, and early bioinformatics.

It has the dreaded Global Interpreter Lock (GIL) 😱, and it was notoriously slow, even compared to other dynamically typed languages. It also fractured its community with the transition from Python 2→3. To this day, some people refuse to touch it because of that transition. But somehow it still managed to succeed in spite of its shortcomings.

I was on the cusp of the Python 2→3 transition, in the last class at my grad school to learn Python 2, so I remember being put off by Python 3. By then the transition had been going on for almost a decade, so a lot of libraries had already been updated, but the final date for Python 2 was nowhere in sight. I was lucky that my transition was relatively painless, but it wasn’t for a lot of people. Let’s take a look back and see how it started, but first, a little housekeeping.

A Brief History of Text Encoding 📚

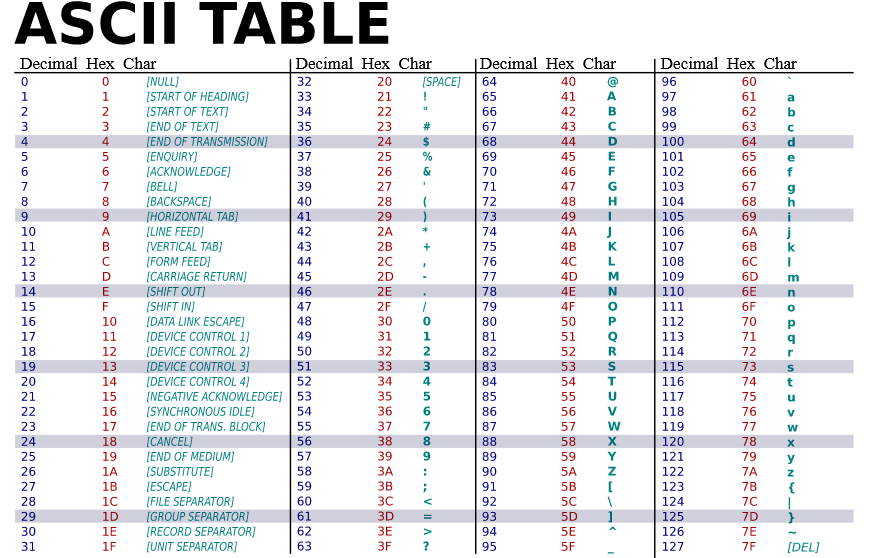

Before we can continue, I’d like to talk about text encoding. It’s a complicated subject, but I’ll do my best to summarize. Computers only understand 1s and 0s, so the letter ‘A’ is meaningless to them. For a computer to operate on text, it needs a way to map the letters of an alphabet to binary numbers. One of the first popular standards was ASCII (American Standard Code for Information Interchange). It was introduced in the 1960s and used 7 bits per character, which gives 2⁷ = 128 possible characters, which I’ve listed below…

This was fine and dandy if you only used the 26 letters of the English alphabet, spoke English, and wrote in standard prose, but it left out the majority of the world. There were no accented letters, no Greek letters, and none of the other special symbols many fields rely on, which made communication in specific domains difficult.
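To make the 7-bit limit concrete, here is a small Python 3 sketch (my own illustration) that looks up the number behind ‘A’, counts the printable ASCII characters, and shows that a character like é simply has no ASCII encoding. The helper name `fits_in_ascii` is hypothetical.

```python
# ASCII assigns each character a number that fits in 7 bits (0-127).
code = ord("A")
bits = format(code, "07b")
print(code, bits)  # 65 1000001

# Of the 128 ASCII values, 95 are printable (space through "~");
# the rest are control codes such as newline and tab.
printable = "".join(chr(i) for i in range(128) if chr(i).isprintable())
print(len(printable))  # 95
print(printable)


def fits_in_ascii(text: str) -> bool:
    """Hypothetical helper: can this text be encoded as ASCII at all?"""
    try:
        text.encode("ascii")
        return True
    except UnicodeEncodeError:
        return False


print(fits_in_ascii("cafe"), fits_in_ascii("café"))  # True False
```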

The International Organization for Standardization (ISO) created an encoding standard in 1987 called ISO-8859-1, also known as Latin-1. It extended the number of characters that could be encoded by using an eighth bit, which allowed 256 different characters to be represented instead of 128. This was the era of single-byte character encodings, where the same 256 values mapped to different letters depending on which encoding was being used.
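Here is a quick Python 3 illustration of that ambiguity: the same single byte, 0xE9, decoded under three different 8-bit encodings. Latin-1, Windows-1251 (Cyrillic), and ISO-8859-7 (Greek) are just example picks; any two code pages would make the point.

```python
# One byte, three meanings: 0xE9 decoded under different single-byte
# encodings (code pages). Nothing in the byte says which one was intended.
raw = bytes([0xE9])
encodings = ("latin-1", "cp1251", "iso8859_7")

decoded = {enc: raw.decode(enc) for enc in encodings}
for enc, char in decoded.items():
    print(f"{enc:>10}: {char}")
# latin-1 gives é, cp1251 gives й, iso8859_7 gives ι
```

If a file written on a Greek machine was opened on a Western European one, every ι silently turned into é, which is exactly the kind of mess a single universal character set was meant to end.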

In the late 1980s, work began on Unicode, a single character set meant to cover every writing system, with version 1.0 published in 1991. Unicode assigns each character a number called a code point, and there are three common standards for encoding those code points as bytes: UTF-8, UTF-16, and UTF-32. The codespace runs from U+0000 to U+10FFFF, which works out to 1,114,112 code points 🤯, giving us lots of room to create new emojis 😉. Of the three encodings, UTF-8 is the most popular. It is variable-length: ASCII characters are encoded in 1 byte, and everything else takes 2 to 4 bytes. If you are interested in learning more about Unicode, I highly recommend this video by Studying With Alex. It breaks down Unicode in a much more visual way, and he explains it all very thoroughly.
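A short Python 3 sketch showing how many bytes a few characters take in each of the three encodings. I use the `-le` variants of UTF-16 and UTF-32 so the counts don’t include a byte order mark.

```python
# Each Unicode character has one code point; UTF-8, UTF-16 and UTF-32
# are different ways of turning that number into bytes.
samples = ["A", "é", "€", "😉"]
encodings = ("utf-8", "utf-16-le", "utf-32-le")  # -le: no byte order mark

sizes = {}
for ch in samples:
    sizes[ch] = tuple(len(ch.encode(enc)) for enc in encodings)
    print(f"U+{ord(ch):04X} {ch}: utf-8={sizes[ch][0]} "
          f"utf-16={sizes[ch][1]} utf-32={sizes[ch][2]} bytes")

# The codespace ends at U+10FFFF, so there are 0x10FFFF + 1 code points.
total_code_points = 0x10FFFF + 1
print(total_code_points)  # 1114112

try:
    chr(0x110000)  # one past the last valid code point
except ValueError:
    print("U+110000 is outside Unicode")
```

UTF-8 wins for mostly-ASCII text because plain English costs one byte per character, while UTF-32 trades space for a fixed width of four bytes per character.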

The Split 💔

Now that we have some understanding of text encoding, the problems with Python 2 will make more sense. Even in the early 2000s, Python’s warts were becoming apparent[1]. So far, the core maintainers had been able to fix issues by evolving the language, adding new features and deprecating old ones. But with the rise of Unicode, and programmers from all over the world wanting to work with strings in their native languages, the fact that Python’s strings were not Unicode by default was hurting it. There was also a desire to clean up parts of the language and remove certain features[2]. Since the transition was going to break a lot of things anyway, some people wanted even more fixed, like concurrency[3].
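To see what changed, here is a Python 3 sketch of the text/bytes split. In Python 2, a plain literal like "héllo" was a byte string, and mixing it with a unicode string made Python implicitly decode it as ASCII, which only blew up at runtime once non-ASCII data showed up. Python 3 makes the boundary explicit. `can_concatenate` is a hypothetical helper for the demo.

```python
# Python 3 keeps text (str, always Unicode) and raw bytes (bytes) apart.
text = "héllo"
data = text.encode("utf-8")

print(type(text).__name__, len(text))  # str 5
print(type(data).__name__, len(data))  # bytes 6 (é needs two bytes in UTF-8)


def can_concatenate(a, b) -> bool:
    """Hypothetical helper: does a + b work, or raise TypeError?"""
    try:
        a + b
        return True
    except TypeError:
        return False


# Mixing text and bytes is refused outright instead of guessing an encoding.
print(can_concatenate(text, data))  # False

# Crossing the boundary has to be explicit.
print(data.decode("utf-8") == text)  # True
```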

To track proposed changes, Python uses the PEP (Python Enhancement Proposal) process. The maintainers decided that all PEPs related to Python 3.0 would be numbered in the 3000s, and the first, PEP 3000, was created on April 5th, 2006[4]. In this PEP, Guido wrote the fabled words that still give people nightmares to this day...

There is no requirement that Python 2.6 code will run unmodified on Python 3.0. Not even a subset. (Of course there will be a tiny subset, but it will be missing major functionality.)

And this was the major rub people had with the transition in the first place. At the time of writing, Python 3.11.1 is the current version of Python. In 2023 no one can argue that Python 2.7 is the better language, but on December 3rd, 2008, when Python 3.0 was first released, that wasn’t clear[5]. When people started attempting the move from 2→3, the problems with the all-or-nothing approach quickly became apparent. Lots of Python 2 code just wouldn’t run on Python 3, sometimes needlessly so. For example, it wasn’t until 2012, with PEP 414[6], that the u prefix for string literals (u'...') was allowed back into the language in Python 3.3, greatly reducing the number of incompatible lines between Python 2 and 3.
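Here is a rough Python 3 sketch of both halves of that story: a Python 2 print statement no longer even compiles, while a u'' literal, legal again since Python 3.3, is just an ordinary str. `compiles` is a hypothetical helper for the demo.

```python
def compiles(source: str) -> bool:
    """Hypothetical helper: does this source compile under the running Python?"""
    try:
        compile(source, "<snippet>", "exec")
        return True
    except SyntaxError:
        return False


# Python 2's print statement is simply a syntax error in Python 3...
print(compiles('print "hello"'))   # False
print(compiles('print("hello")'))  # True

# ...while u'' literals, allowed again by PEP 414 (Python 3.3+), are just str.
greeting = u"héllo"
print(type(greeting).__name__, greeting == "héllo")  # str True
```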

Many also felt that the tool Python 3 shipped to help convert code from 2→3[7] was inadequate, especially since it didn’t help with Unicode, one of the major reasons for the version change in the first place. One of its biggest shortcomings was that it offered no support for straddling code, that is, code that works on both versions, to ease the transition. Community members soon stepped in to fill the gaps. Six[8] was a library that acted as a compatibility layer between Python 2 and 3. It let people write code that worked on both versions, giving maintainers of large code bases an easier time porting their code over. Python-Future[9] was another library, which came with a variety of tools to backport features into Python 2 and convert code from Python 2→3. But even with all these tools, and the new features in Python 3, adoption was glacial.
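For a flavor of what straddling code looked like, here is a hypothetical, minimal compatibility shim in the spirit of Six (which exposes names like `six.PY2` and `six.text_type`). It is a sketch, not Six’s actual implementation, and the `to_text` helper is my own. Real projects also leaned on `from __future__ import print_function, unicode_literals` to make Python 2 behave more like Python 3.

```python
import sys

# Hypothetical minimal compatibility layer, loosely modeled on Six.
PY2 = sys.version_info[0] == 2

if PY2:  # only runs on Python 2
    text_type = unicode  # noqa: F821  (this name only exists on Python 2)
else:
    text_type = str


def to_text(value, encoding="utf-8"):
    """Return `value` as text: unicode on Python 2, str on Python 3."""
    if isinstance(value, bytes):  # on Python 2, bytes is an alias of str
        return value.decode(encoding)
    return text_type(value)


print(to_text(b"caf\xc3\xa9"))  # café
print(to_text(42))              # 42
```

The point of a shim like this is that the rest of the code base calls `to_text` and never checks the version itself, so the day Python 2 support is dropped, only this one file has to change.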

Four years in, people still were not adopting Python 3[10]. Eight years in, Python 3 was still slower than Python 2[11], which hurt, considering Python was already known as one of the slowest dynamically typed, garbage-collected languages. Eventually a date was set, and on January 1st, 2020, Python 2 was sunset[12]. But many maintainers, held back by large and interwoven dependencies, waited until late in Python 2’s lifetime to make the changes.

Guido even admitted in his 2018 Python 2→3 retrospective that he was still struggling with the transition at Dropbox[13] due to the enormous quantity of code they had. Today, in 2023, if you look hard enough you will still find Python 2 in the wild. Maya still ships with a Python 2 interpreter[14], RHEL still supports Python 2[15], and so do tools like ArcGIS[16].

At this point, dear reader, you might be inclined to ask, “Is Python a dead language?” The answer is no. It’s actually more popular than it has ever been[17]. It managed to do what Perl 6 (now Raku) could not: survive a polarizing split in the community. But there was a cost.

What did it cost 🪙?

There is a human cost to every decision, even in software. The transition from Python 2→3 left a scar in many people’s minds[18], and some have vowed never to touch the language again because of it. Guido himself began to feel the toll as he received more and more pushback from the community, which made the project much less enjoyable for him to work on. He ultimately resigned as Benevolent Dictator For Life (BDFL) on July 12th, 2018[19], citing PEP 572[20] (assignment expressions) as a big reason why. But by pulling off the transition and becoming successful, Python 3 proves the old Bjarne Stroustrup adage:

There are only two kinds of languages: the ones people complain about and the ones nobody uses

The machine learning wave definitely contributed to its popularity, but Python had seen huge investment before that, despite what some people considered major shortcomings: the GIL, the garbage collector, significant whitespace, its slowness, and so on. I think that speaks to the language making mostly the right tradeoffs over its lifetime. One thing I’ve noticed is that as programming has become more popular, not everyone gets into it because they love the theory or want to know how computers work. For many, it’s just a tool to get stuff done. There’s nothing wrong with that, but very few languages make it as easy as Python. Does this mean we will still see Python in thirty years? I think so.

None of this excuses the fact that the community had to step up to ease the transition when the maintainers failed to provide adequate support. That being said, as someone who has never had to steward a programming language for nearly 30 years, I’d like to end this post with a quote from Anton Ego in Ratatouille.

In many ways, the work of a critic is easy. We risk very little, yet enjoy a position over those who offer up their work and their selves to our judgment. We thrive on negative criticism, which is fun to write and to read. But the bitter truth we critics must face, is that in the grand scheme of things, the average piece of junk is probably more meaningful than our criticism designating it so.

Call to Action 📣

If you made it this far, thanks for reading! I’m still new here and trying to find my voice. If you liked this article, please consider liking and subscribing. And if you haven’t already, why not check out another article of mine?