The inaugural OpenAI DevDay recently concluded, bringing with it a slew of announcements about OpenAI's products. The event kicked off with enthusiasm as CEO Sam Altman highlighted the incredible journey the company has been on since the release of ChatGPT in November 2022 and GPT-4 in March 2023. To underscore OpenAI's impact, Altman proudly showcased nine companies that have integrated ChatGPT Enterprise into their operations:

Amgen

Bain and Company

Lowe’s

PwC

Klarna

Carlyle

Square

Shopify

JetBlue

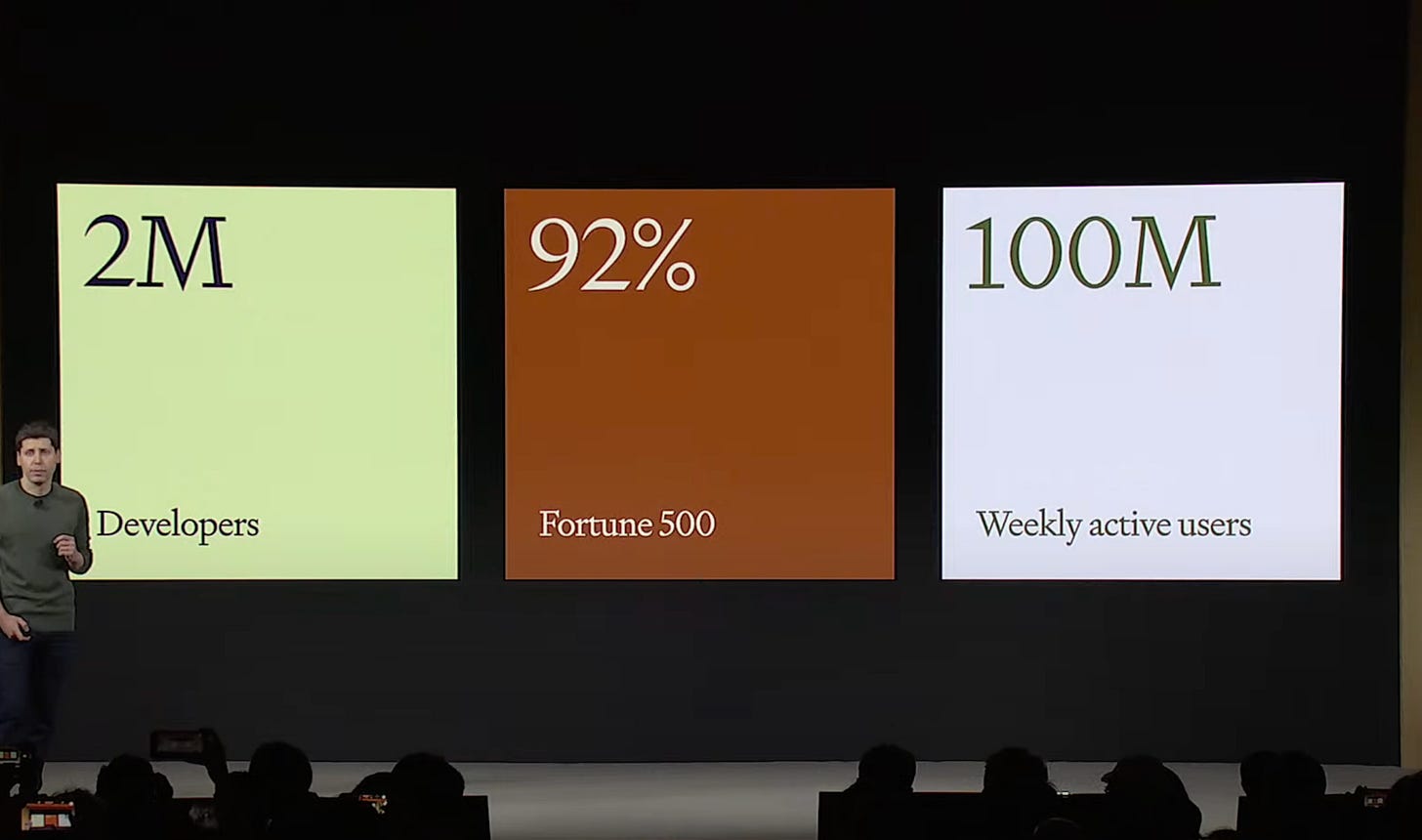

But it didn’t end there. He shared some impressive numbers: 2 million developers building on the API, over 92% of Fortune 500 companies building with OpenAI’s products, and 100 million weekly active ChatGPT users.

The figure that caught my attention was 92%: over 92% of Fortune 500 companies are building with OpenAI’s products. This is impressive considering how conservative the corporate world tends to be about adopting new technologies. It shows that if your product is innovative enough, it doesn’t matter how new and untested it is. I’m sure Microsoft leading the charge has helped bring other companies on board, though, since many Fortune 500 companies run on Microsoft’s cloud platform, Azure. All of this corporate interest bodes well for people getting into LLMs while it’s still early. If these companies are building with these technologies, I’m confident they will be hiring people with existing experience using them. The sooner you can build that portfolio, the better.

Altman then turned the spotlight on the latest addition to OpenAI's model lineup, GPT-4 Turbo.

He highlighted six key areas that the new model addresses:

Context Length

128K-token context window

More control

JSON mode

Better function calling and instruction following

Reproducible outputs via a new seed parameter

Better World Knowledge

Knowledge up to April 2023

New Modalities

DALL-E 3, GPT-4 Turbo with Vision, and a new text-to-speech model are all coming to the API today

GPT-4 Turbo can accept images as inputs through the API

A new release of Whisper (large-v3), OpenAI’s open-source speech recognition model

Customization

Fine-tuning has been expanded to the 16K version of GPT-3.5 Turbo

Applications are open for GPT-4 fine-tuning

Custom Models: OpenAI researchers work with a company to build a custom model

Higher Rate Limits and Cheaper prices

Doubled tokens per minute for GPT-4 customers

GPT-4 Turbo is 3x cheaper than GPT-4 for prompt tokens and 2x cheaper for output tokens, about 2.75x cheaper for most customers

GPT-3.5 Turbo 16K prices cut from $0.003 to $0.001 per 1K input tokens and from $0.004 to $0.002 per 1K output tokens

Log probabilities viewable in the API (coming soon)

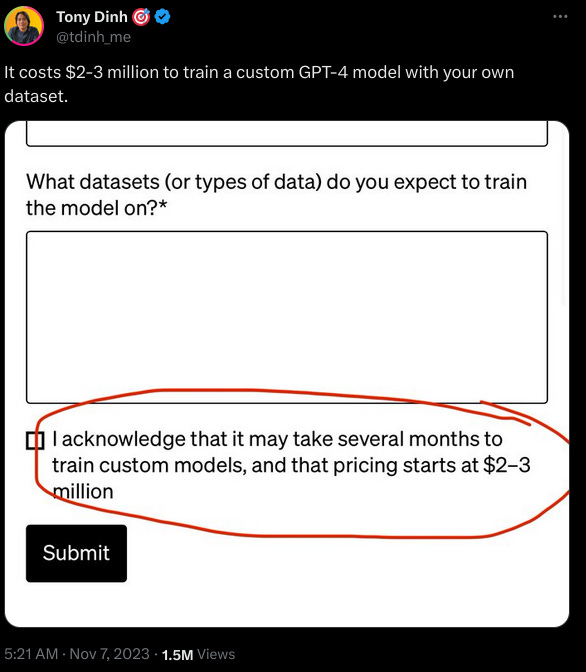
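Two of the "More control" items, JSON mode and the seed parameter, are request options on the Chat Completions endpoint. Below is a minimal sketch that only builds the request body, so it runs offline without an API key. `build_json_request` is a hypothetical helper. `gpt-4-1106-preview` is assumed to be the GPT-4 Turbo preview model name at launch, so check it against current docs. JSON mode also expects the word "JSON" to appear somewhere in the messages, so the helper refuses to build a request without it.

```python
import json


def build_json_request(question: str,
                       system: str = "You are a helpful assistant. Reply in JSON.",
                       seed: int = 42) -> dict:
    """Assemble a Chat Completions request body using JSON mode and a fixed seed.

    Hypothetical helper: it only builds the payload. Sending it (for example with
    the official `openai` Python package) is left out so the sketch runs offline.
    """
    messages = [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
    # JSON mode rejects requests whose messages never mention JSON.
    if not any("json" in m["content"].lower() for m in messages):
        raise ValueError("JSON mode needs the word 'JSON' somewhere in the messages")
    return {
        "model": "gpt-4-1106-preview",               # GPT-4 Turbo preview (assumed name)
        "messages": messages,
        "response_format": {"type": "json_object"},  # JSON mode: model emits valid JSON
        "seed": seed,                                # same seed + same params: best-effort repeatable output
        "temperature": 0,
    }


body = build_json_request("List three card games and how many players each needs.")
print(json.dumps(body, indent=2))
```

Even with a fixed seed, OpenAI describes reproducibility as best effort. The `system_fingerprint` field in each response changes when the backend configuration changes, which hints that outputs may differ between otherwise identical calls.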

They focused on these areas because they drew some of the loudest developer feedback. I hear plenty of it myself when it comes to fine-tuning: I frequently get asked about training GPT models on specific datasets to improve accuracy. I almost always steer people away, because that is a laborious and expensive undertaking. You can usually get away with better prompts and Retrieval Augmented Generation (RAG). But if you have deep pockets, Tony Dinh spotted a figure on a form that should give you some idea of the cost.

A custom-trained model can be yours for the low, low price of $2-3 million. I don’t know about you, but that is too rich for my blood. I’ll keep advocating for alternative ways to improve accuracy.
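To make the RAG alternative concrete, here is a minimal sketch. It retrieves the passages most relevant to a question and places them in the prompt, so the model answers from supplied context instead of needing retraining. Real setups usually rank passages with an embedding model and a vector store. To keep the example self-contained, I've swapped in plain word-count cosine similarity. All function names and the sample passages are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Put the retrieved passages in front of the question (the "augmented" part)."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "In Cribbage, a hand of fifteen scores two points.",
    "Gin Rummy players form sets and runs to reduce deadwood.",
    "Shopify Sidekick helps merchants manage their stores.",
]
print(build_prompt("How many points is fifteen worth in Cribbage?", docs, k=1))
```

The resulting prompt goes to whichever chat model you use. Telling the model to rely only on the supplied context tends to improve accuracy on your own data, with no training involved.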

Besides these six areas, Sam also announced Copyright Shield to help customers who might face legal trouble when using ChatGPT Enterprise or the API. I’ll let Sam’s words speak for themselves here:

Copyright Shield means that we will step in and defend our customers and pay the costs incurred if you face legal claims around copyright infringement, and this applies both to ChatGPT Enterprise and the API.

He then followed up with:

Let me be clear, this is a good time to remind people: we do not train on data from the API or ChatGPT Enterprise, ever.

That is also a good reminder of the implication: conversations in ChatGPT and ChatGPT Plus can be used to improve the models, so be careful about what you share with them.

Copyright Shield is an interesting answer to a fear many people have when using LLM tools. Taken at face value, it seems to say, “We are so confident that legal issues with our models will be rare that we will put our money where our mouth is.” This will reassure a lot of people, but it only covers the 92% of Fortune 500 companies and the 2 million developers using OpenAI’s products, which is really only a small group of people. Remember that Altman cited 100 million weekly active ChatGPT users. Those users are not covered.

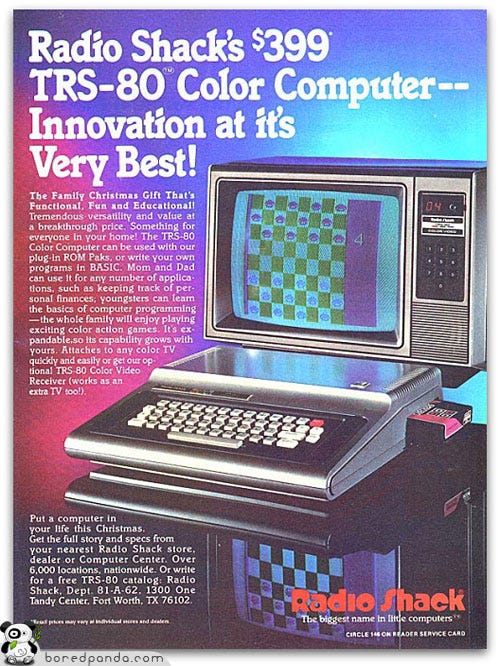

Moving on, Sam said they focused on price first when thinking about how to improve the model, with performance coming later. I think this is the right call, and it parallels the 8-bit computers of old: in the beginning, people were more excited about their affordability than their performance.

It continues to amaze me how cheap these technologies are getting. Altman says GPT-4 Turbo’s 128K context window can ingest 300 pages of a standard book all at once. That’s a huge amount of text. At the current price of $0.01 per 1K input tokens, filling that entire window would cost about $1.28. But if you don’t need to consume a lot of tokens at once, other models like GPT-3.5 Turbo might suit your needs better. At $1 to consume 1 million tokens of input in 4K-token chunks, or $1 to output 500,000 tokens, it’s practically a steal. I can’t stress enough how many problems need nowhere near that many tokens. It took me a long time to reach that number using the API, and that was on the old pricing.
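This short Python script checks the arithmetic in this paragraph and the "~2.75x" figure from the pricing list. The per-1K-token prices below do not come from the keynote recap. They are my understanding of the launch list prices: GPT-4 at $0.03 input and $0.06 output, GPT-4 Turbo at $0.01 and $0.03, and GPT-3.5 Turbo at $0.001 and $0.002. Treat them as assumptions and swap in current numbers before relying on the results.

```python
import math

# Per-1K-token list prices in USD. Assumed launch prices, not taken from the
# keynote itself; check current pricing before relying on them.
PRICES = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "gpt-3.5-turbo": {"input": 0.001, "output": 0.002},
}


def dollars(model: str, input_tokens: int = 0, output_tokens: int = 0) -> float:
    """Cost in USD of sending input_tokens and receiving output_tokens."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1000


# Filling GPT-4 Turbo's whole 128K-token context window once.
book = dollars("gpt-4-turbo", input_tokens=128_000)

# One million input tokens on GPT-3.5 Turbo, sent as 4K-token requests.
chunks = math.ceil(1_000_000 / 4_000)
million_in = chunks * dollars("gpt-3.5-turbo", input_tokens=4_000)

# Half a million output tokens on GPT-3.5 Turbo.
half_million_out = dollars("gpt-3.5-turbo", output_tokens=500_000)

# Blended GPT-4 vs GPT-4 Turbo cost ratio for a prompt-heavy 9:1 token mix.
ratio = dollars("gpt-4", 9_000, 1_000) / dollars("gpt-4-turbo", 9_000, 1_000)

print(f"128K-token prompt on GPT-4 Turbo: ${book:.2f}")
print(f"{chunks} chunks, 1M input tokens on GPT-3.5 Turbo: ${million_in:.2f}")
print(f"500K output tokens on GPT-3.5 Turbo: ${half_million_out:.2f}")
print(f"GPT-4 / GPT-4 Turbo cost ratio at 9:1 input:output: {ratio:.2f}x")
```

A prompt-heavy mix of nine input tokens per output token lands exactly on the 2.75x figure. An even 1:1 split works out to 2.25x, so how much you save depends on how long your outputs are relative to your prompts.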

OpenAI's dedication to competitive pricing aims to narrow the gap that simpler local models could potentially fill. Local models are much more difficult to set up, require some beefy hardware, even for small contexts, and perform worse. While local models have their place and are continually evolving, OpenAI's solutions offer better results for most users, given their ease of use and performance advantages.

Next, Sam brought out Microsoft CEO Satya Nadella to talk briefly about their partnership, but other than saying that Microsoft is all in on large language models, not much more was said. I’m sure Microsoft is happy just to be seen as a trendy, innovative company again.

Sam Altman then turned his attention to ChatGPT, which also got some much-needed love. For most people, this is still the best way to interact with large language models. It now uses GPT-4 Turbo with all the latest improvements, including the new knowledge cutoff. It can browse the web, write and run code, and take in and generate images. Its interface also got a facelift. Finally, in response to user feedback, the model picker is gone: ChatGPT will pick which of these tools to use based on the question asked, so there is no more switching between modes.

A new feature Altman introduced for the ChatGPT interface is GPTs. GPTs are tailored versions of ChatGPT for a specific purpose. They combine instructions, knowledge, and actions, which makes them more helpful in a variety of situations. If you’ve worked with these models before, you know that clever prompt engineering can constrain the context of their outputs, which reduces hallucinations and improves output quality. Of the ready-made GPTs OpenAI will provide, the one I’m personally interested in is Game Time, as I’ve been learning card games like Cribbage and Gin Rummy, which are difficult to master.

Next in the presentation, Sam brought out Jessica Shieh to demo Zapier AI Actions. She showed how she could use a GPT to update her calendar and then notify Sam of a scheduling conflict on his phone.

After Jessica’s demo, Sam came back to show how to create a GPT from scratch. He stressed that this could be done entirely in natural language, with no coding involved. His GPT gave advice to startups based on the lectures he had given to YC startups.

Altman then showed off the GPT Store, where popular GPTs can be browsed and searched. The most successful ones will get a revenue share from OpenAI, but no specifics were given on how that will work. He said it would be coming in the next couple of weeks.

Then Romain Huet, OpenAI’s head of developer experience, took the stage for the final demo, this time of the new Assistants API. Many apps that leverage GPT are AI agents, and Altman referenced a few:

Shopify’s Sidekick

Discord’s Clyde bot

Snap’s My AI

As someone who has worked with AI agents before, I know they can be difficult to set up, a point Altman also highlighted. The Assistants API aims to be a simpler way to set up these agents. Romain demoed a travel app called Wanderlust. With just a name and some initial instructions, he was able to create an AI agent that integrated seamlessly into the app. This new API will cover a lot of simple use cases for app developers, and I’m excited to see it powering more websites.
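To show roughly what "just a name and some initial instructions" means in practice, the sketch below lists the HTTP calls for one round trip through the Assistants API as it was introduced in beta. The steps are to create an assistant, create a thread, add a user message, and start a run. It only builds the request bodies, so it runs offline. The helper name, the Wanderlust instructions, and the `{thread_id}`/`{assistant_id}` placeholders are my own. The API has changed since launch, so check the current reference before building on it.

```python
import json


def assistant_round_trip(name: str, instructions: str,
                         user_message: str) -> list[tuple[str, str, dict]]:
    """List the (method, path, body) calls for one Assistants API round trip.

    Hypothetical helper that only builds requests. `{thread_id}` and
    `{assistant_id}` are placeholders for IDs returned by earlier calls.
    """
    return [
        # 1. Create the assistant once: a model, a name, and instructions.
        ("POST", "/v1/assistants",
         {"model": "gpt-4-1106-preview", "name": name, "instructions": instructions}),
        # 2. Create a thread per conversation; the API stores its history.
        ("POST", "/v1/threads", {}),
        # 3. Add the user's message to the thread.
        ("POST", "/v1/threads/{thread_id}/messages",
         {"role": "user", "content": user_message}),
        # 4. Start a run of the assistant on the thread. You then poll the run
        #    until it completes and read the reply from the thread's messages.
        ("POST", "/v1/threads/{thread_id}/runs", {"assistant_id": "{assistant_id}"}),
    ]


for method, path, body in assistant_round_trip(
    name="Wanderlust",
    instructions="You are a travel assistant. Suggest destinations and day-by-day itineraries.",
    user_message="Plan three days in Paris.",
):
    print(method, path, json.dumps(body))
```

The split between a long-lived assistant and per-conversation threads is what saves work. The API keeps the conversation history on its side, so your app does not have to resend the whole history on every call.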

Finally, Altman took the stage one last time. He congratulated the team for all their hard work and ended with this:

What we launched today is going to look very quaint relative to what we’re busy creating for you now

It was equal parts exciting and ominous. It’s very clear that OpenAI wants to firmly establish itself as the go-to option in the LLM market before any competitors can gain a foothold. Their aggressive pricing and the birth of the GPT Store are two parts of that plan. But it’s hard to blame them: they continue to amaze people with how many new and innovative products they pump out each quarter. They’ve gotten many conservative corporations to integrate their products into their tech stacks. With models as powerful as GPT-3.5 Turbo and GPT-4, that is almost a given, since they act like a fusion reactor for new ideas. This was a big day for OpenAI, and if Altman is right, it is only the beginning.

Call To Action 📣

If you made it this far, thanks for reading! If you are new, welcome! I like to talk about technology, niche programming languages, AI, and low-level coding. I’ve recently started a Twitter account and would love for you to check it out. I also have a Mastodon if that is more your jam. If you liked the article, consider liking and subscribing. And if you haven’t, why not check out another article of mine! A.M.D.G. and thank you for your valuable time.
