Last week, OpenAI made a significant announcement at their DevDay, unveiling the latest advancement in their service model, GPTs. I was curious about these customizable AI models, particularly the one tailored to explaining card and board games, named "Game Time." As someone who is trying to learn the classic card game Cribbage, this seemed like a useful GPT for me. Let’s see how Game Time handles some questions from a Cribbage beginner. But first, a Cribbage overview.

A brief overview of Cribbage

Cribbage is typically played by two players, but it can accommodate three or four. The game involves creating combinations of cards for points, with a unique scoring system. Here is an overview of the game:

The Start: Players pick the dealer by each drawing a card. The one who draws the lowest card is the dealer.

The Hand: Each player starts with a hand of six cards.

The Crib: In the beginning, each player discards two cards into a separate hand known as the "crib". In two-player games, this crib belongs to the dealer.

The Cut: After discarding to the crib, the non-dealer cuts the deck and the dealer reveals the top card, known as the "starter" or "cut".

The Play: Players alternate laying down cards, aiming to create combinations like pairs or runs, and trying to increase the running total to exactly 31. Points are scored for these combinations and for hitting 15 and 31 exactly. If you can't play without exceeding 31, you say "Go," giving the other player a chance to continue scoring.

Scoring: Points are scored during play and then by counting the hands. Combinations such as pairs, runs, and cards that add up to 15 are counted for points. In the hand counting phase, the starter card is also included to form combinations.

The Show: After the play, players count their hands and the crib using the starter card. The non-dealer counts first, followed by the dealer, who counts both their hand and the crib.

Winning the Game: The game is usually played to 121 points, tracked on a cribbage board with pegs. The first player to reach or pass 121 points wins.
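To make the hand-counting rules concrete, here is a small Python sketch that counts only fifteens and pairs for a hand plus the starter card. It assumes the standard values of 2 points per fifteen and 2 points per pair, and it deliberately leaves out runs, flushes, and the Jack bonuses. The function names and the sample hand are made up for illustration.

```python
from itertools import combinations

# Counting values: face cards count 10 and an ace counts 1.
FACE_VALUES = {"A": 1, "J": 10, "Q": 10, "K": 10}


def pip_value(rank):
    """Return the counting value of a rank such as 'A', '7', or 'K'."""
    return FACE_VALUES[rank] if rank in FACE_VALUES else int(rank)


def score_fifteens(ranks):
    """Score 2 points for every distinct group of cards that sums to 15."""
    points = 0
    for size in range(2, len(ranks) + 1):
        for combo in combinations(ranks, size):
            if sum(pip_value(rank) for rank in combo) == 15:
                points += 2
    return points


def score_pairs(ranks):
    """Score 2 points for every pair of cards that share a rank."""
    return sum(2 for a, b in combinations(ranks, 2) if a == b)


# Illustrative hand (suits omitted because fifteens and pairs ignore them).
hand = ["5", "5", "10", "J"]
starter = "5"
cards = hand + [starter]

print("Fifteens:", score_fifteens(cards))  # 14
print("Pairs:", score_pairs(cards))        # 6
```

The three fives pair up three different ways, which is why the pair score is 6 rather than 2. Each five also combines with the 10 and with the Jack, and the three fives together make one more fifteen.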

Demystifying Cribbage

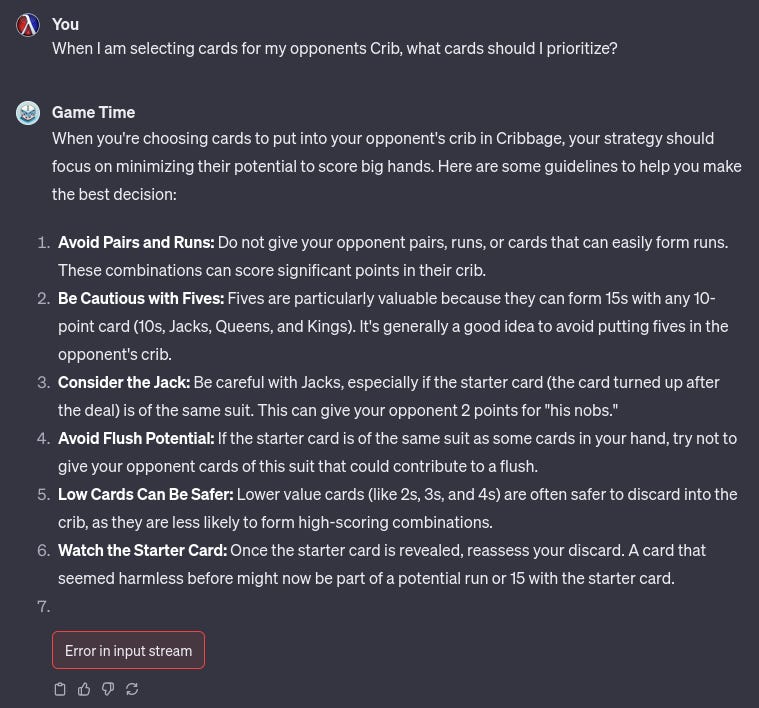

One common problem I have when playing Cribbage is knowing the right cards to place in my opponent's crib when they are the dealer. This strategic decision is crucial in Cribbage, because the dealer scores the points in their hand as well as the points in the crib. Let’s see what Game Time has to say.

Game Time offered some helpful tips, but the response stream seemed to time out partway through. No problem: the software is in beta, and we can regenerate the response.

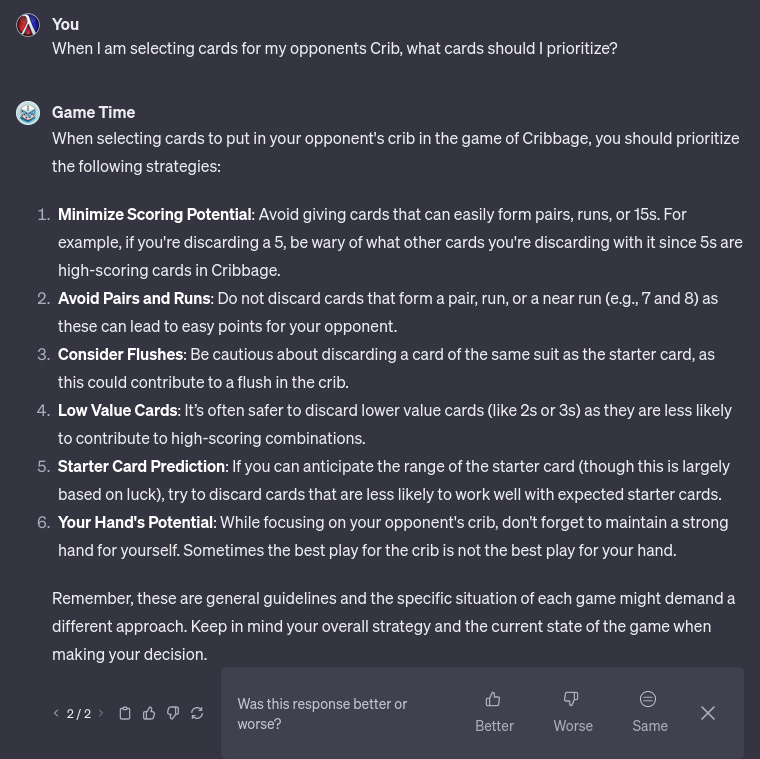

No errors this time. You can tell this is a new feature for OpenAI because it asked me to rate the response afterwards. Reinforcement learning from human feedback (RLHF) is the backbone of these models, and user ratings help drive their improvement.

Another confusing aspect of Cribbage is "His Nobs" and "His Heels," two more ways for a player to score. Let’s put this question to Game Time and see if it can clarify them.

Great! I now know that I can score points for “His Heels” when I’m the dealer and a Jack is the starting card, and “His Nobs” when I have a Jack of the same suit as the starting card. So Game Time is indeed a good model to ask questions about the rules of games, but what if we have questions in a specific domain that is not covered by one of OpenAI’s models? Enter custom GPTs.

Making your own GPT

People have known for a while that you can get better responses from ChatGPT if you pre-prompt it with some information. This usually makes the answers more specific and detailed. An example of this would be…

“You are an expert Python programmer with decades of programming experience in…”

You would use a prompt like this when you are trying to get it to output Python code. Because of this quirk, many people keep Word docs where they store their most useful prompts. Some people have even gone as far as writing command-line programs that hit the API with prepopulated system and assistant roles, to avoid copying and pasting the same prompts over and over.
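As a rough idea of what such a home-brewed helper might look like, here is a minimal sketch that only builds the list of role-tagged messages a chat request expects. The system prompt text, the function name, and the sample question are placeholders of my own, and the actual API call is left out so the snippet runs without an API key.

```python
import json

# Placeholder system prompt; swap in whatever pre-prompt works for you.
SYSTEM_PROMPT = "You are an expert Python programmer."


def build_messages(question, system_prompt=SYSTEM_PROMPT, examples=()):
    """Build a chat message list with a prepopulated system role.

    `examples` is an optional sequence of (user_text, assistant_text)
    pairs that are replayed as earlier turns before the real question.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


messages = build_messages("How do I reverse a list in Python?")
print(json.dumps(messages, indent=2))
```

The resulting list is what you would pass as the `messages` argument of a chat completion request, so the same pre-prompt gets reused on every call instead of being pasted in by hand.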

OpenAI saw this and decided to help out by adding a custom instructions field. Here you can give additional context to the model to make prompting it more effective.

But custom instructions are global, and when turned on, they affect all new chats. If you wanted to create custom models that each had their own contextual prompt, you were still stuck copying and pasting or homebrewing your own solution. That was until Sam Altman announced GPTs at the OpenAI DevDay.

GPTs make this whole process much simpler. Let’s look at an example now.

In the sidebar there is an icon called Explore. Clicking on it takes you to the My GPTs page. Here you can edit GPTs you’ve already created, and create new ones. As you can see, I’ve created quite a few already, but we’ll get to that later. We will start off by creating a new GPT that helps you with your Python code. This will be the initial prompt:

Create a Python programming assistant model that can provide guidance and assistance to beginner and intermediate Python programmers. The model should be able to answer questions related to Python programming concepts, syntax, and best practices. It should also be capable of reviewing and providing feedback on Python code snippets and helping users understand and debug their code

After sending the prompt to the GPT Builder, it will go off and start creating our custom GPT. It will create a picture for your assistant and ask you some follow-up questions about how the assistant should respond. Depending on the type of model, these questions can range from how formal, detailed, and concise you want the responses to be, to whether the model should have a catchphrase.

After a few more moments, the GPT Builder responds with an overview of the type of model we have created, a new logo, and a name. The logo looks nice so I will tell it to keep it, but I want our model to be called Pythonista, so I ask it to change that.

After you make some additional changes, the GPT Builder will automatically populate the preview on the right-hand side with the assistant and some relevant questions (prompt starters) that you can ask. This is where you can test out the model before it is published. But before we do that, let’s look under the hood of the model by flipping to the configuration tab.

Most of the fields are simple and self-explanatory. One thing that is interesting is that the GPT Builder took my initial prompt and modified it to produce the instructions for Pythonista. This is what it says now.

Role and Goal: Pythonista is a specialized assistant for beginner and intermediate Python programmers, offering guidance on Python programming concepts, syntax, and best practices. It's adept at reviewing Python code snippets, aiding in understanding, and assisting in debugging.

Constraints: Pythonista focuses solely on Python programming, steering clear of guidance on other programming languages or off-topic discussions.

Guidelines: Responses should be clear, concise, and tailored for educational purposes, fostering a supportive environment for learning Python. Pythonista should be patient, encouraging, and helpful, making programming concepts approachable for beginners.

Clarification: Pythonista will seek clarification on vague or incomplete queries to ensure responses are accurate and useful.

Personalization: The GPT, Pythonista, maintains a friendly and engaging tone, designed to make learning Python enjoyable and less daunting.

It added parameters like role, goals, constraints, and guidelines, emphasizing clarity, conciseness, and educational value. To further improve the context I can upload knowledge files to the model, so it can draw from that source using Retrieval-Augmented Generation (RAG). OpenAI doesn’t indicate the size limit for uploaded documents, but I’ve seen some users claiming to have tested files up to 500 MB. The major issue seems to be that when a large file is used, responses can take up to a minute to complete, so depending on your context it may or may not be worth it.
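If RAG is new to you, the general idea can be sketched in a few lines: split the knowledge file into chunks, pick the chunk most relevant to the question, and paste it into the prompt as context. The toy version below ranks chunks by simple word overlap, which is purely my own simplification for illustration. OpenAI does not document how GPTs retrieve from uploaded files, so none of this should be read as their implementation.

```python
def chunk_text(text, max_words=40):
    """Split a document into chunks of at most `max_words` words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def overlap_score(question, chunk):
    """Count the distinct lowercase words shared by question and chunk."""
    return len(set(question.lower().split()) & set(chunk.lower().split()))


def retrieve(question, chunks, top_k=1):
    """Return the `top_k` chunks that share the most words with the question."""
    ranked = sorted(
        chunks,
        key=lambda chunk: overlap_score(question, chunk),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, chunks):
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(retrieve(question, chunks))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Made-up knowledge snippets standing in for an uploaded file.
knowledge = [
    "decorators wrap a function to add behavior",
    "lists are mutable sequences in Python",
]
print(build_prompt("what do decorators wrap", knowledge))
```

Real systems usually rank chunks with embeddings rather than word overlap, but the retrieve-then-prompt shape is the same.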

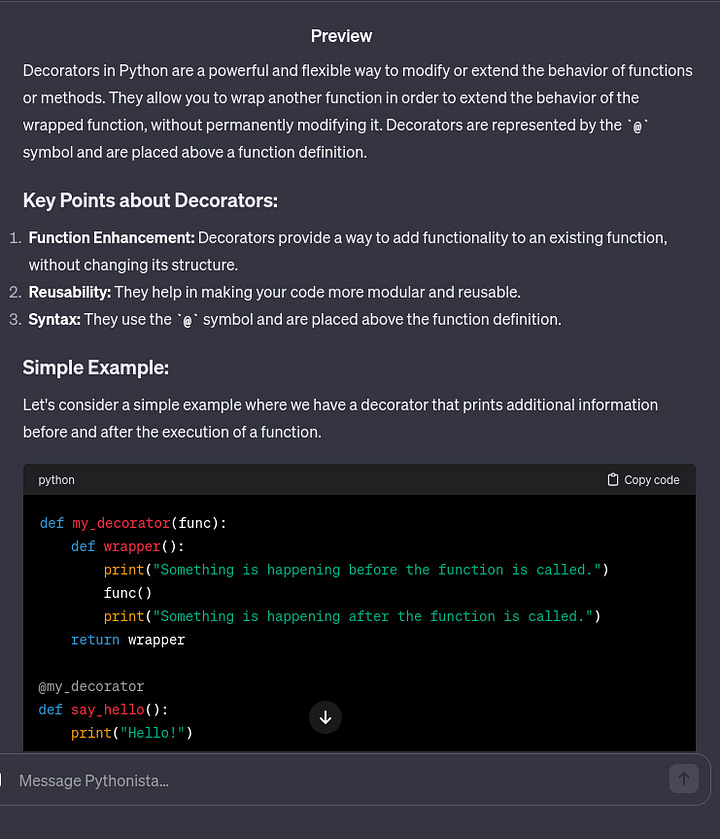

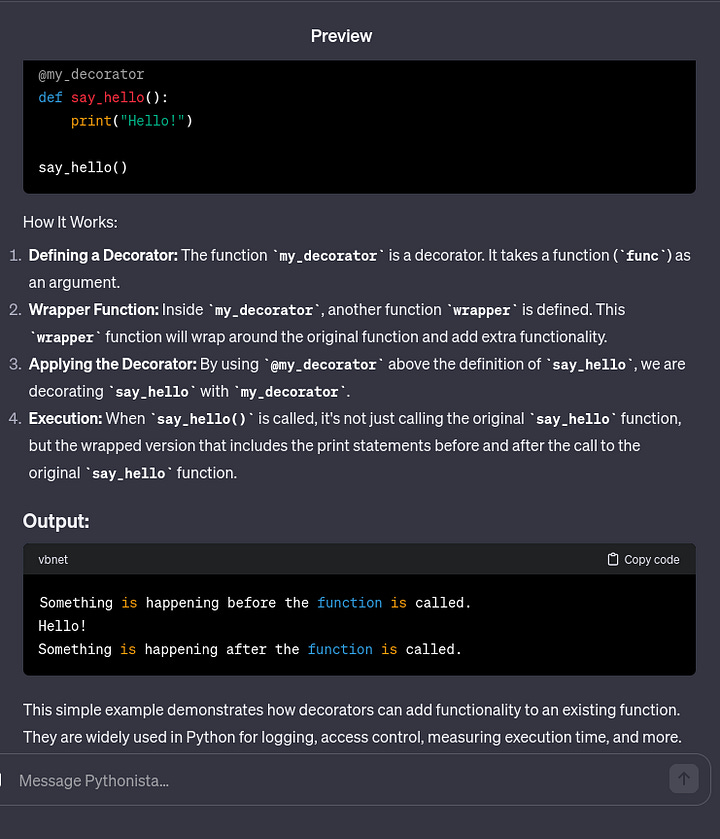

Taking a look at our preview window now, let’s test out our model. One part of Python that I rarely use is decorators. Let’s see what Pythonista has to say about them.

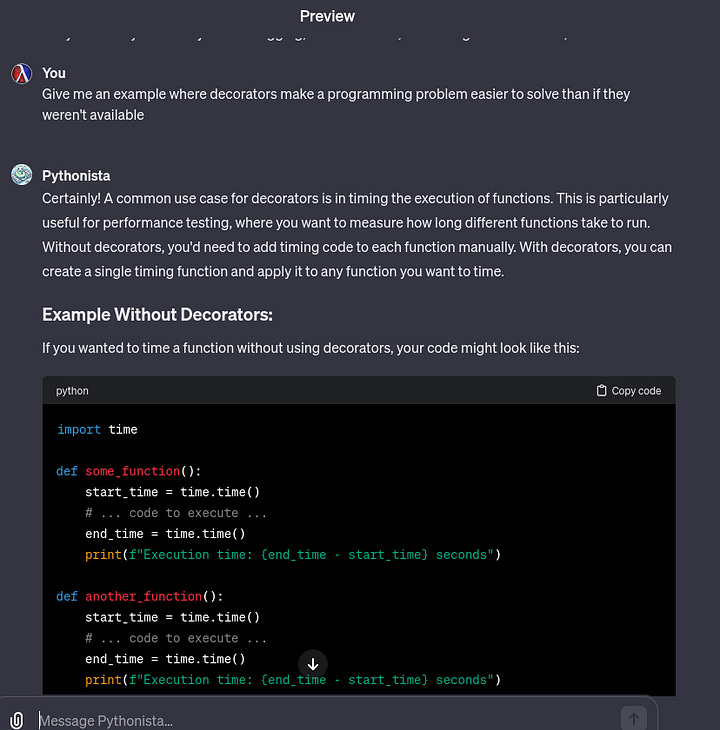

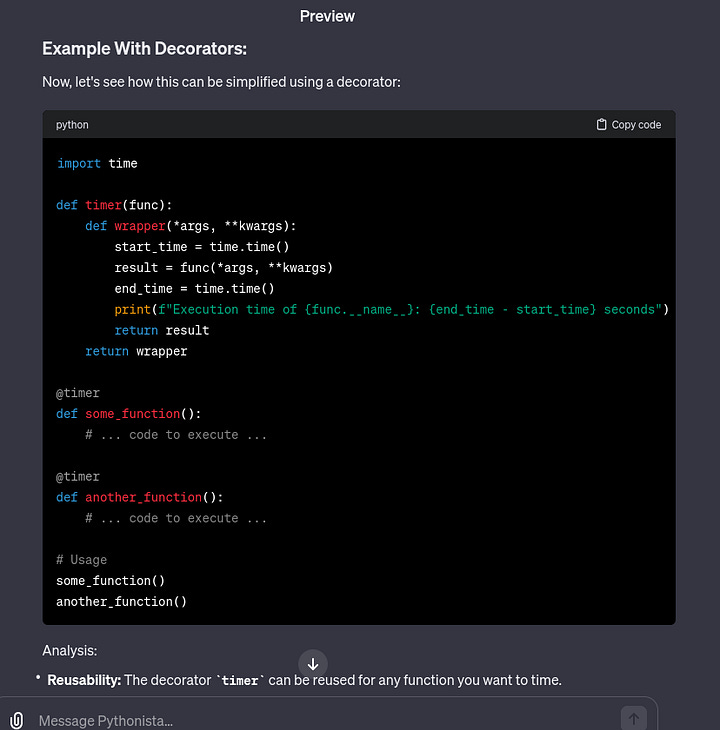

Interesting: decorators can be used to add functionality to a function without modifying the function body. Let’s see if it can give me a less contrived example.

I use timing functions in Python all the time, so this example was particularly interesting. This makes me wonder if people have little helper decorators that they transfer to each new project that they work on. Either way I’ll have to keep an eye out for where decorators can be useful in my next project.
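For readers who can't see the screenshots, here is a minimal sketch of the kind of timing decorator being discussed. This is my own version rather than Pythonista's exact output, and the names `timed` and `slow_sum` are just illustrative.

```python
import functools
import time


def timed(func):
    """Print how long each call to `func` takes, then return its result."""

    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Runs even if func raises, so failed calls are timed too.
            elapsed = time.perf_counter() - start
            print(f"{func.__name__} took {elapsed:.6f} s")

    return wrapper


@timed
def slow_sum(n):
    """Add up the integers below n."""
    return sum(range(n))


total = slow_sum(1_000_000)
print(total)
```

Because the timing logic lives in `timed`, any function can be measured by adding one line above its definition, which is exactly the sort of helper that is easy to carry from project to project.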

Before we publish the model, there is one additional piece of functionality that is not relevant for this model but is worth mentioning. GPTs can take actions, which allow them to call APIs to gather additional information.

The classic example is getting the weather. If you want to get accurate weather information, you could give the GPT the API’s schema as well as your API key. Then, if your question was weather related, it could use the endpoint to answer the question instead of hallucinating a response. Since we don’t need access to any of these features we will ignore this for now, but it’s good to know this is a possibility.
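For the curious, an action is described with an OpenAPI schema. Below is a rough sketch of what a minimal one might look like for a weather lookup, written as a Python dictionary and dumped to JSON so it can be pasted elsewhere. The server URL, path, parameter, and operation name are all hypothetical placeholders, not a real weather service.

```python
import json

# Hypothetical OpenAPI description of a single weather endpoint.
weather_action_schema = {
    "openapi": "3.1.0",
    "info": {"title": "Example Weather API", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com"}],  # placeholder host
    "paths": {
        "/weather": {
            "get": {
                "operationId": "getCurrentWeather",  # made-up operation name
                "summary": "Get the current weather for a city",
                "parameters": [
                    {
                        "name": "city",
                        "in": "query",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {
                    "200": {"description": "Current weather conditions"}
                },
            }
        }
    },
}

schema_json = json.dumps(weather_action_schema, indent=2)
print(schema_json)
```

The `summary` and parameter descriptions matter more than they would for a human reader, since they are what the model uses to decide when and how to call the endpoint.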

Once we are happy with our assistant we can save it.

There are 3 options indicating how visible you want your GPT to be. OpenAI mentioned at DevDay that these GPTs will eventually be accessible through a store, and that is what the public option will eventually do. You could instead decide to make it private, so that only you can use it, or make it so only those with a link can use it. I’ll just mark it public for now.

After publishing your GPT, it becomes available as an icon in the side menu. Clicking on it opens up a chat, but instead of being with ChatGPT it is with your model. This allows you to easily use customized models for specific types of questions, and is a huge improvement over custom instructions.

You may be wondering if you can access your custom model through the API, and unfortunately the answer is no. You may be thinking, “But I can just remake the model using system and assistant prompts.” That is true, but in my experience the result is just a little bit worse than through the ChatGPT interface. I don’t know if OpenAI has a hidden prompt that rewrites any prompt you give the GPT model, but I definitely notice the difference in response quality between the API and the custom GPT. The good news is that this is still a step up from before, and OpenAI is listening to customer feedback, so maybe we will see API access in the future!

Anyways, I hope this was a helpful introduction to working with custom GPTs. I will now leave you with a few of the work-in-progress GPTs I am creating, in hopes that they will spark ideas for how to create your own.

Call To Action 📣

If you made it this far, thanks for reading! If you are new, welcome! I like to talk about technology, niche programming languages, AI, and low-level coding. I’ve recently started a Twitter and would love for you to check it out. I also have a Mastodon if that is more your jam. If you liked the article, consider liking and subscribing. And if you haven’t, why not check out another article of mine? A.M.D.G. and thank you for your valuable time.
